Funktionale Programmierung und Verifikation (IN0003), WS 2019/20

Competition

Each exercise sheet contains a magnificiently written competition exercise by the Master of Competition Senior, Herrn MC Sr. Eberl, or Master of Competition Junior, Herrn MC Jr. Kappelmann. These exercises count just like any other homework, but are also part of the official competition – overseen and marked by the MC Sr. The grading scheme for these exercises will vary: performance, minimality or beauty are just some factors the MC Sr. adores. You might use any function from the following libraries for your solution: base, array, containers, unordered-containers, attoparsec, binary, bytestring, hashable, mtl, parsec, QuickCheck, syb, text, transformers

Each week, the top 30 of the week will be crowned and the top 30 of the term updated. The best 30 solutions will receive points: 30, 29, 28, and so on. The favourite solutions of the MC Sr. will be cherished and discussed on this website. At the end of the term, the best k students will be celebrated with tasteful trophies, where k is a natural number whose value still will be determined when time has come. No bonus points can be gained, but an indefinite amount of fun, respect, and some trophies!

Important: If you do not want your name to appear on the competition, you can choose to not participate by removing the {-WETT-}...{-TTEW-} tags when submitting your homework.

Outline:

- All-time Top 30

- Results for Week 1

- Results for Week 2

- Results for Week 3

- Results for Week 4

- Results for Week 5 (updated 26.12.2019)

- Results for Week 6

- Results for Week 7

- Results for Week 8

- Results for Week 9

- Results for Week 10

- Results for Week 12

- Results for Week 13

- What is a token?

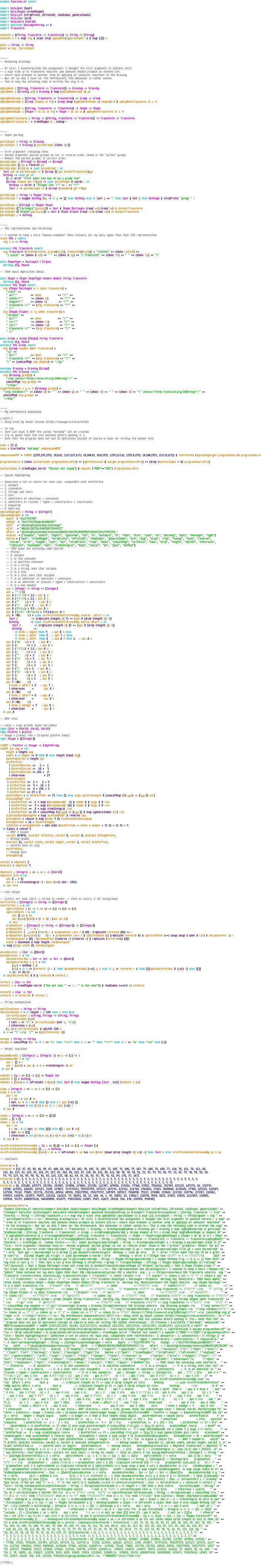

| Finale top 30 des Semesters | |||

|---|---|---|---|

| Platz | Wettbewerber*in | Punkte | |

| 1. | Simon Stieger | 315 | |

| 2. | Almo Sutedjo | 245 | |

| 3. | Marco Haucke | 216 | |

| 4. | Yi He | 194 | |

| 5. | Le Quan Nguyen | 187 | |

| 6. | Martin Fink | 184 | |

| 7. | Tal Zwick | 152 | |

| 8. | Bilel Ghorbel | 150 | |

| 9. | Johannes Bernhard Neubrand | 137 | |

| 10. | Anton Baumann | 122 | |

| 11. | Yecine Megdiche | 120 | |

| 11. | Torben Soennecken | 120 | |

| 13. | Tobias Hanl | 90 | |

| 14. | Kevin Schneider | 86 | |

| 15. | Omar Eldeeb | 84 | |

| 16. | Andreas Resch | 81 | |

| 17. | Kseniia Chernikova | 72 | |

| 18. | Timur Eke | 70 | |

| 19. | Jonas Lang | 69 | |

| 20. | Maisa Ben Salah | 66 | |

| 21. | Xiaolin Ma | 65 | |

| 22. | Janluka Janelidze | 62 | |

| 23. | Felix Rinderer | 61 | |

| 23. | Steffen Deusch | 61 | |

| 25. | Dominik Glöß | 59 | |

| 26. | Daniel Anton Karl von Kirschten | 56 | |

| 27. | Emil Suleymanov | 55 | |

| 27. | Mokhammad Naanaa | 55 | |

| 29. | Felix Trost | 54 | |

| 30. | David Maul | 48 | |

Die Ergebnisse der ersten Woche

| Top 30 der Woche | |||

|---|---|---|---|

| Platz | Wettbewerber*in | Tokens | Punkte |

| 1. | Simon Stieger | 21 | 30 |

| 2. | Almo Sutedjo | 22 | 20 |

| 3. | Florian von Wedelstedt | 23 | 15 |

| Marco Zielbauer | 23 | 15 | |

| Kevin Burton | 23 | 15 | |

| Maisa Ben Salah | 23 | 15 | |

| Andreas Resch | 23 | 15 | |

| Christo Wilken | 23 | 15 | |

| Robert Schmidt | 23 | 15 | |

| Timur Eke | 23 | 15 | |

| Christoph Reile | 23 | 15 | |

| Peter Wegmann | 23 | 15 | |

| Jonas Lang | 23 | 15 | |

| Kevin Schneider | 23 | 15 | |

| David Maul | 23 | 15 | |

| Torben Soennecken | 23 | 15 | |

| 17. | Martin Fink | 24 | 10 |

| David Huang | 24 | 10 | |

| Kseniia Chernikova | 24 | 10 | |

| Johannes Bernhard Neubrand | 24 | 10 | |

| Michael Plainer | 24 | 10 | |

| Anton Baumann | 24 | 10 | |

| Fabian Pröbstle | 24 | 10 | |

| Yi He | 24 | 10 | |

| 25. | Ryan Stanley Wilson | 25 | 5 |

| Leon Julius Zamel | 25 | 5 | |

| Mihail Stoian | 25 | 5 | |

| Pascal Ginter | 25 | 5 | |

| Markus R. | 25 | 5 | |

| Robin Brase | 25 | 5 | |

| Yecine Megdiche | 25 | 5 | |

| Bilel Ghorbel | 25 | 5 | |

| Leon Windheuser | 25 | 5 | |

| Nikolaos Sotirakis | 25 | 5 | |

| Christopher Szemzö | 25 | 5 | |

| Florian Melzig | 25 | 5 | |

(scoring scheme was changed from previous years because it seemed unfair in this instance)

The MC Sr bids you welcome to this year's Wettbewerb. This tradition was started by the MC emeritus Jasmin Blanchette in 2012. Much time has passed since then, and the MC emeritus is now a professor in Amsterdam, and his former MC Jr is now the MC Sr. Still, the Wettbewerb progresses more or less as always, mutatis mutandis, and the MC Sr will attempt to keep the old spirit alive. (If you are confused by the style of this blog, the fact the the MC refers to himself in the third person, or the odd mixture of German, English, and occasional other languages – that is also a legacy of the MC emeritus and changing it now would just feel wrong)

This week's problem was posed by new MC Jr Kevin Kappelmann, who seems to have an unhealthy obsession with century-old number theory. Well, the MC Sr supposes he shalt not judge (lest he be judged himself). The problem that was posed was to determine whether or not a given number is a quadratic residue for a given modulus n, i.e. if it has a square root in the residue ring ℤ/nℤ.

The MC Sr received 538 submissions. The evaluation was quite tedious for him and was made more tedious by the fact that a significant number of students chose to ignore the instructions on the exercise sheet that clearly stated that if one wishes to use auxiliary functions in one's solution for quadRes, all of them have to be within the {-WETT-} tags. Perhaps they were trying to trick the MC; perhaps they were just careless. The MC decided to be lenient this week and adjusted their solutions accordingly, but it turns out that after moving all the auxiliary functions into the tags, none of them were even remotely competetive anymore anyway.

In any case, let us talk about the solutions themselves: All of the correct ones that the MC looked at essentially followed the hint from the exercise sheet and simply tried all candidates. This is not surprising since nobody currently knows an algorithm that is substantially better than this – at least not (unless one already has a prime factorisation of the modulus), and this forms the basis for various cryptographic systems.

The longest correct solution, with 95 tokens, is this:

quadRes n a | n == 1 = True | a > n = quadRes n (a `mod` n) | a `mod` n == 0 || a `mod` n == 1 = True | a < 0 = quadRes n (a+n) | otherwise = aux n 1 a where aux n x a | equivMod n (a `mod` n)(x^2) == True = True | x == n-1 = False | otherwise = aux n (x+1) a

There is a lot of potential for optimisation here. The initial case distinctions are unnecessary, and the definition of the auxiliary function could easily be written in a single line with some logical connectives. Also, remember the Meta-Master Tobias Nipkow told you: never write if b == True!

A nice and much more streamlined version of the same basic approach can be found in the following 32-token solution by Jonas Jürß:

quadRes n a = find n where find i = i >= 0 && (i*i `mod` n == a `mod` n || find (i-1))

He does not have any unnecessary case distinctions and inlined the equivMod function. Moreover, he only used material that was covered in the first week of the lecture. The MC approves, but unfortunately this straightforward approach is not enough to land a spot among the Top 30 even in the very first week.

All the Top 30 students made use of lists one way or another. The obvious way was to use the list comprehensions introduced in the second week of the lecture. Here is one representative 24-token solution for this approach by Anton Baumann, which earned him a well-deserved 17th place:

quadRes n a = or [x^2 `mod` n == a `mod` n | x <- [0 .. n]]

Note that he cleverly checked candidates from 0 to n (as opposed to 0 to n - 1 as suggested on the exercise sheet). It doesn't hurt (because n ≡ 0 (mod n)) and it saves two tokens. With minor adjustments – such as using the elem function that returns whether a given value occurs in a list or not – one can save another token (solution by Marco Zielbauer):

quadRes n a = a `mod` n `elem` [x^2 `mod` n | x <- [0 .. n]]

A couple of students noticed that [0..n] is in fact just syntactic sugar for enumFromTo 0 n, which can, in some cases, save a few more tokens; cf. e.g. the following 22-token solution by Almo Sutedjo:

quadRes n q = or [x^2 `mod` n == mod q n| x <- enumFromTo 0 n]

Combining these two tricks, Simon Stieger found a 21-token solution, which is almost identical to the one the MC had found:

quadRes n a = a `mod` n `elem` [x*x `mod` n | x <- enumFromTo 1 n]

The nice thing about this solution is that it does not really use anything that was not covered in the lecture (other than perhaps the use of enumFromTo function).

However, one of our tutors – Herr Großer – went above and beyond and managed to beat the MC's solution with 20 tokens:

quadRes n = flip any (join timesInteger <$> enumFromTo 0 n) . on eqInteger (`mod` n)

There's a lot going on here and you are not expected or required to understand any of this. The MC will, however, do his best to explain it briefly:

- timesInteger from GHC.Num is just another name for (*) on integers (i.e. multiplication). Similarly, eqInteger is just equality on integers.

- For a two-parameter function f, join f is a somewhat cryptic way to write the function that takes an input x and returns f x x. In other words: join timesInteger is a shorter way to write (^ 2).

- The . operator is function composition (usually written as ∘ in mathematics).

- on is a nice little operator from Data.Function defined as on f g x y = f (g x) (g y). It allows you to write our equivMod function as equivMod n = (==) `on` (`mod` n) (two numbers are congruent if they are equal after reducing them modulo n).

- <$> is another way to write the map function (which we will learn about in a few weeks) as an infix operator.

If we deobfuscate Herr Großer's solution a little bit, we get something like this:

quadRes n a = any (\y -> a `mod` n == y `mod` n) [x * x | x <- [0..n]]

His tricks only make things shorter because timesInteger exists. Taking a hint from current world affairs, the MC had imposed severe restrictions on imports this year and thought this would be enough to preclude such trickery with timesInteger, eqInteger, etc. Alas, it seems that he was mistaken. Kudos to Herr Großer for finding this!

All in all, this week's solutions were perhaps not very surprising. The MC hopes that the following week's exercise will leave more room for his students' creativity to shine.

So, what are this week's lessons?

- The MC really has to streamline his competition evaluation process. Sorting through the hundreds upon hundreds of submissions with his collection of 7-year old half-rotten arcane Perl and Bash scripts did not work very well.

- Simple exercises can lead to a surprising variety of solutions, but not always.

- Things that pay off when minimising the number of tokens:

- inlining (eschewing auxiliary functions)

- a straightfoward simple solution

- twiddling with infix operators

- knowing the library functions

- arcane lore (cf. Herr Großer)

Die Ergebnisse der zweiten Woche

| Top 30 der Woche | |||

|---|---|---|---|

| Platz | Wettbewerber*in | Tokens | Punkte |

| 1. | Marco Haucke | 37 | 30 |

| 2. | Robin Brase | 40 | 29 |

| 3. | Almo Sutedjo | 41 | 28 |

| 4. | Timur Eke | 41 | 27 |

| 5. | Simon Stieger | 42 | 26 |

| 6. | Andreas Resch | 43 | 25 |

| Martin Fink | 43 | 25 | |

| 8. | Le Quan Nguyen | 45 | 23 |

| 9. | Janluka Janelidze | 46 | 22 |

| Dominik Glöß | 46 | 22 | |

| Kseniia Chernikova | 46 | 22 | |

| 12. | Anton Baumann | 47 | 19 |

| Bilel Ghorbel | 47 | 19 | |

| Torben Soennecken | 47 | 19 | |

| Maisa Ben Salah | 47 | 19 | |

| 16. | Oleksandr Golovnya | 48 | 15 |

| Markus Edinger | 48 | 15 | |

| Nikolaos Sotirakis | 48 | 15 | |

| Kristina Magnussen | 48 | 15 | |

| 20. | Achref Aloui | 49 | 11 |

| Tobias Hanl | 49 | 11 | |

| 22. | Yi He | 49 | 9 |

| 23. | Florian Melzig | 50 | 8 |

| 24. | Yu Han Li | 50 | 7 |

| Roman Heinrich Mayr | 50 | 7 | |

| Fabian Pröbstle | 50 | 7 | |

| Steffen Deusch | 50 | 7 | |

| 28. | Maria Pospelova | 51 | 3 |

| 29. | David Maul | 51 | 2 |

| Kevin Burton | 51 | 2 | |

This week, the MC Sr received 156 (apparently) correct student submissions with correctly placed {-WETT-} tags. It seems that placing the tags is very difficult, and the MC grew so annoyed ploughing through submission after submission with incorrectly placed tags that he chose to do away with the MC emeritus's Perl scripts and instead automate the entire evaluation process using Haskell (whose suitability as a scripting language is greatly underappreciated, in the MC's opinion).

In any case, the variety among the solutions was still not too great this week, but already a bit greater than in the previous one. The longest submission had 254 tokens:

bernoulli :: Integer -> Rational bernoulli x = helper 0 x (fromIntegral 1) where helper k n s | n == 0 = (fromInteger 1) | k == 0 = if k== (n-1) then (fromIntegral (n `choose` k)) * ( s / (fromIntegral (k - n - 1))) else (fromIntegral (n `choose` k)) * ( s / (fromIntegral (k - n - 1))) + (helper (k+1) n ((fromIntegral (n `choose` k)) * ( s / (fromIntegral (k - n - 1))))) | k == (n-1) = ((fromIntegral (n `choose` k)) * ((helper 0 k 1 ) / (fromIntegral(k - n - 1)))) | k < (n-1) = ((fromIntegral (n `choose` k)) * ((helper 0 k 1 ) / (fromIntegral(k - n - 1)))) + (helper (k+1) n ((fromIntegral (n `choose` k)) * ( s / (fromIntegral (k - n - 1)))))

Clearly, the author was not optimising for number of tokens. That is fine – tokens are a silly measure after all – but nevertheless, this solution is somewhat needlessly complicated. The more complicated a solution is, the more likely it is that it will contain hard-to-spot bugs. Needlessly complicated code also tends to be quite hard to maintain.

Consider, in contrast, the following 55-token solution by Herr Rinderer (which is representative of many similar solutions by other students):

n `choose` k = product [n-k+1..n] `div` product [1..k] bernoulli 0 = 1 bernoulli n = sum [n `choose` k % (k - n - 1) * bernoulli k | k <- [0 .. n - 1]]

This is much nicer: It is short, concise, and – given the recurrence from the problem sheet – obviously correct. This is more or less exactly the solution the MC would have written if the number of tokens were of no consequence.

However, for the sake of the competition, the number of tokens is of consequence, and 55 tokens was not enough to secure a spot among the Top 30 this week. So what can be done to shrink this? Well, look at the following 43-token solution by Herr Resch:

bernoulli 0 = 1 bernoulli n = sum [ product (succ k `enumFromTo` n) `div` product [1..n-k] % (pred k - n) * bernoulli k | k <- 0 `enumFromTo` pred n]

He achieved a respectable 6th place by employing several simple tricks to great effect:

- inlining the definition of choose

- enumFromTo instead of the [a..b] syntax

- succ k and pred k instead of k + 1 and k - 1

- infix notation like `enumFromTo` to save parentheses around function arguments

This is almost as far as one can stretch the basic approach following the recurrence from the problem sheet, but we are not quite there yet. The MC Sr managed to get down to 42 tokens using the following approach:

f = on div product bernoulli m = 0 ^ m - sum [enumFromTo l m `f` enumFromTo 1 k % l * bernoulli k | k <- 0 `enumFromTo` pred m, let l = succ m - k]

This eliminates the case distinction for n = 0 by using the fact that 0 ^ 0 = 1 in Haskell. Herr Eke even managed to get to 41 tokens like this:

bernoulli 0 = 1 bernoulli n = sum [genericLength (elemIndices k . map genericLength . subsequences . genericReplicate n $ 0) % succ n `subtract` k * bernoulli k| k <- enumFromTo 0 $ pred n]

The trick he uses here is that the binomial coefficient  counts the number of k-element subsets of a set with n elements. He therefore uses functions from Haskell's list library to generate a list of n zeroes, then constructing the list of all 2n subsequences of that list, retrieves all those positions in that list that contain a list of length k, and counts the length of the remaining list. This is, of course, horribly inefficient, but since we are primality counting tokens this week, that is perfectly fine. This approach could easily be brought down to 40 tokens, but as far as the MC Sr knows, that is as good as it gets with this approach.

counts the number of k-element subsets of a set with n elements. He therefore uses functions from Haskell's list library to generate a list of n zeroes, then constructing the list of all 2n subsequences of that list, retrieves all those positions in that list that contain a list of length k, and counts the length of the remaining list. This is, of course, horribly inefficient, but since we are primality counting tokens this week, that is perfectly fine. This approach could easily be brought down to 40 tokens, but as far as the MC Sr knows, that is as good as it gets with this approach.

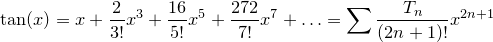

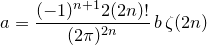

Simon Stieger found the following nice 42-token solution using the explicit formula

for the Bernoulli numbers:

bernoulli n = sum [product [negate k..pred j-k] `div` product [1..j] * j^n % succ k | k <- enumFromTo 0 n, j <- enumFromTo 0 k]

The Top 3 all used an algorithm based on the Akiyama–Tanigawa transform, cf. the following winning 37-token solution by Herr Haucke:

atCell m n = if n == 0 then recip a else a * atCell m b `subtract` atCell a b where a=m+1;b=n-1 bernoulli = atCell 0

Simply put, it works like this: We start with the sequence 1, 1/2, 1/3, 1/4, …. Call this sequence a0. We then form a new sequence of numbers from this by subtracting every number from its predecessor and multiplying the result with the position of the number in the sequence, i.e. ai+1, j = (j+1)(ai,j - ai,j+1). We iterate this process, which gives us a big infinite rectangle of numbers. The Bernoulli numbers are simply the leftmost column of that rectangle. The following is a more readable implementation of the Akiyama–Tanigawa algorithm that might make it a bit more clear how it works:

akiyamaTanigawa :: Num a => [a] -> [[a]] akiyamaTanigawa xs = iterate step xs where step xs = zipWith3 (\x y i -> fromInteger i * (y - x)) xs (tail xs) [1..] bernoullis :: [Rational] bernoullis = [head row | row <- akiyamaTanigawa [recip n | n <- [1..]]] bernoulli :: Integer -> Rational bernoulli n = genericIndex bernoullis n

Lastly, the following is the MC's 26-token version of this:

bernoulli = genericIndex $ map head $ iterate ((*) `zipWith` enumFrom 1 <<< zipWith subtract `ap` tail) $ recip `map` enumFrom 1

Herr Haucke, our winner this week, had complained on Piazza about the MC's choice of -½ for B1 instead of ½. He ended up in the first place in the end anyway, but just to prove that the MC Sr can also handle this alternative definition, here is another 26-token solution that produces B1 = ½.

bernoulli = genericIndex $ map head $ iterate ((*) `zipWith` enumFrom 1 <<< zipWith subtract =<< tail) $ recip `map` enumFrom 1

You are not expected to understand what is going on here and the MC will not even attempt to explain it. For the curious: This uses the reader monad.

What have we learned this week?

- Placing {-WETT-} tags is hard.

- There are quite a few different ways to compute Bernoulli numbers, but we have yet to find one that is shorter than the Akiyama–Tanigawa transform.

- Don't look at solutions from the token-counting part of the Wettbewerb for advice on how to write readable Haskell.

Die Ergebnisse der dritten Woche

| Top 30 der Woche | |||

|---|---|---|---|

| Platz | Wettbewerber*in | Performance | Punkte |

| 1. | Kseniia Chernikova | 5.6 | 30 |

| 2. | Wolf Birger Thieme | 5.4 | 28 |

| 3. | Simon Stieger | 5.6 | 26 |

| 4. | Yecine Megdiche | 4.6 | 24 |

| 5. | Almo Sutedjo | 4.5 | 23 |

| 6. | Marco Haucke | 4.4 | 22 |

| 7. | Timur Eke | 3.6 | 18 |

| 8. | Le Quan Nguyen | 3.5 | 17 |

| Xiaolin Ma | 3.5 | 17 | |

| Guillaume Gruhlke | 3.5 | 17 | |

| Stefan Wölfert | 3.5 | 17 | |

| David Maul | 3.5 | 17 | |

| 13. | Robin Brase | 3.1 | 13 |

| 14. | Anna Franziska Horne | 2.6 | 12 |

| 15. | Chien-Hao Chiu | 2.5 | 11 |

| 16. | Torben Soennecken | 1.6 | 6 |

| Johannes Stöhr | 1.6 | 6 | |

| Philipp Steininger | 1.6 | 6 | |

This week, the MC Sr was very disappointed. He received only eighteen submissions in total, even though the problem that was posed was the exact one as in the previous week. This means that 16 students could simply have submitted their solution from last week (or the sample solution for that matter) and have gotten into the Top 30. That's at least 105 unclaimed free Wettbewerbspunkte! Additionally, three competitors are very lucky he received points at all: one of them called his file Excercise_3.hs by mistake, so it failed to compile. Two others forgot to include the choose functions in their {-WETT-} tags. The MC, however, noticed this and graciously decided to include it in the evaluation anyway. (It is not impossible that a few more submissions fell through the cracks due to things like this – if yours is one of them, feel free to email the MC Sr and he will see what he can do)

To make up for the unfortunate lack of submissions, the MC Sr contributed four submissions of his own. These are, of course, außer Konkurrenz. To the MC Sr's delight, the 16 solutions he did receive contained a refreshing amount of variety and he will do his best to showcase all of it in this entry.

Since the efficiency of the different submissions varies greatly, the MC Sr decided to do several phases of elimination. In each phase, a particular imput n is fixed so that a clear separation between the fast and the slow submissions can be seen. The slower ones are then eliminated and we proceed to the next phase. The performance rating is comprised of two numbers a.b, where the major performance indicator a is the number of phases survived (which typically corresponds to a better asymptotic running time complexity) and b indicates the ranking within a single major class (which probably indicates a better constant factor). The final score is then computed as 6a + b.

In the first phase, we pick n = 24 to see if the solutions work at all:

| Performance evaluation: n = 24 | ||

|---|---|---|

| Name | Time | |

| Kseniia Chernikova | 0. | 0013 s |

| MC Sr (fast) | 0. | 0013 s |

| Simon Stieger | 0. | 0014 s |

| MC Sr (Akiyama–Tanigawa) | 0. | 0014 s |

| MC Sr | 0. | 0014 s |

| Wolf Birger Thieme | 0. | 0015 s |

| Yecine Megdiche | 0. | 0015 s |

| Le Quan Nguyen | 0. | 0016 s |

| Timur Eke | 0. | 0016 s |

| MC Sr (naïve memoised) | 0. | 0016 s |

| Almo Sutedjo | 0. | 0016 s |

| Xiaolin Ma | 0. | 0016 s |

| Markus Großer (tutor) | 0. | 0017 s |

| Stefan Wölfert | 0. | 0017 s |

| David Maul | 0. | 0018 s |

| Guillaume Gruhlke | 0. | 0018 s |

| Robin Brase | 0. | 0019 s |

| Marco Haucke | 0. | 0019 s |

| MC Sr (power series) | 0. | 0038 s |

| Anna Franziska Horne | 0. | 0054 s |

| Chien-Hao Chiu | 0. | 030 s |

| Johannes Stöhr | 4. | 9 s |

| Philipp Steininger | 5. | 1 s |

| Torben Soennecken | 5. | 2 s |

We must already bid three participants farewell. All of them did a straightforward implementation of the recursive formula from the problem sheet (much like the sample solution from last week) and simply cannot compete even for relatively small inputs. The reason for this is that in order to compute Bn, the formula requires the values of all Bi for i < n and values that are computed are never stored, but recomputed every time, leading to exponential running time.

Next, we go up to n = 50:

| Performance evaluation: n = 50 | ||

|---|---|---|

| Name | Time | |

| Kseniia Chernikova | 0. | 0013 s |

| MC Sr (fast) | 0. | 0013 s |

| MC Sr | 0. | 0016 s |

| MC Sr (power series) | 0. | 0017 s |

| Simon Stieger | 0. | 0018 s |

| Wolf Birger Thieme | 0. | 0019 s |

| Markus Großer (tutor) | 0. | 0019 s |

| MC Sr (Akiyama–Tanigawa) | 0. | 0023 s |

| Yecine Megdiche | 0. | 0024 s |

| Timur Eke | 0. | 0030 s |

| Almo Sutedjo | 0. | 0036 s |

| MC Sr (naïve memoised) | 0. | 0037 s |

| Stefan Wölfert | 0. | 0038 s |

| Xiaolin Ma | 0. | 0041 s |

| Guillaume Gruhlke | 0. | 0047 s |

| Robin Brase | 0. | 0047 s |

| Le Quan Nguyen | 0. | 0047 s |

| Marco Haucke | 0. | 0049 s |

| David Maul | 0. | 010 s |

| Anna Franziska Horne | 29. | 7 s |

| Chien-Hao Chiu | 92. | 8 s |

Again, we must say good-bye to two competitors whose solution is clearly much slower than all the others. These two also implemented the straightforward exponential recurrence from the exercise sheet, but they additionally realised that Bn = 0 for any odd n greater than 1 and added a corresponding case distinction. Frau Horne additionally implemented a slightly more efficient computation of the choose function for computing binomial coefficients.

Their solutions are, of course, still exponential, but these improvements, so for slightly larger inputs, the running time still skyrockets, which is what we see in the above comparison.

For the next phase, we have to go up all the way to n=1000 to see an appreciable difference between the solutions:

| Performance evaluation: n = 1000 | ||

|---|---|---|

| Name | Time | |

| MC Sr (fast) | 0. | 040 s |

| MC Sr | 0. | 049 s |

| Markus Großer (tutor) | 0. | 14 s |

| MC Sr (power series) | 0. | 15 s |

| Kseniia Chernikova | 0. | 42 s |

| Simon Stieger | 0. | 51 s |

| Wolf Birger Thieme | 0. | 69 s |

| Yecine Megdiche | 2. | 1 s |

| Almo Sutedjo | 5. | 2 s |

| MC Sr (Akiyama–Tanigawa) | 6. | 4 s |

| Marco Haucke | 8. | 6 s |

| Timur Eke | 13. | 3 s |

| Xiaolin Ma | 19. | 8 s |

| MC Sr (naïve memoised) | 20. | 4 s |

| Guillaume Gruhlke | 21. | 4 s |

| Le Quan Nguyen | 22. | 1 s |

| Stefan Wölfert | 22. | 7 s |

| David Maul | 24. | 3 s |

| Robin Brase | 36. | 8 s |

Let's focus on the solutions in the lower half of the table. They now show a bit more variety:

- Herr Le Quan and Herr Ma * used a memoisation approach that remembers all the Bernoulli numbers computed so far to avoid unnecessary recomputations. The MC Sr also included a more streamlined version of this in the evaluation, which is referred to as MC Sr (memoised) above.

- Herr Brase implemented the Akiyama–Tanigawa algorithm (which had already served him, the MC Sr, and some of his competitors well in the previous week). Curiously, Herr Brase's implementation is considerably slower than the MC Sr's implementation of the exact same algorithm. The reason for this is that Herr Brase uses 1 % n to create the sequence 1, 1/2, 1/3, 1/4, … whereas the MC Sr uses the function recip :: Rational → Rational. The former introduces an unnecessary GCD computation (since % does not know, a priori, that numerator and denominator are on lowest terms), which apparently slows things down quite a bit.

- The Herren Wölfert, Maul, Gruhlke, and Herr Eke all used the explicit formula already mentioned in last week's post. The latter does a bit better because he optimised the computation of the binomial coefficient a little bit.

- Interestingly, Herr Sutedjo and Herr Haucke (who are still in the race at this point) implement the same formula, but optimised it a bit more cleverly: Herr Sutedjo computes the binomial coefficients using the factorial function, which he additionally caches so that computing the binomial coefficient becomes much cheaper (using an ingenious functional data structure known as finger trees from the library). Herr Haucke computes the binomial coefficients using prime numbers, which seems to help quite a bit and is likely the reason why he survived this round. He also did a lot of other optimisations, the majority of which the MC Sr unfortunately believes did not actually improve performance but only made the code less readable.

- Herr Megdiche goes a step further and uses arrays instead of sequences, which of course provide constant-time access. He uses the formula from the exercise sheet and memoises both the values of Bn computed so far and the binomial coefficients in a beautiful mutual recursion – the function b uses the values from the array bernoullis, and the array bernoullis is filled with the results of the function b. This exploits the laziness of Haskell, and the memoisation combinators from the various Hackage libraries work in much the same way.

* The MC Sr is never quite sure which part of a Vietnamese or Chinese name to use to address someone. Feel free to correct him via email if he got it wrong! Of course, that goes for any other mistakes he makes as well, be it with names or otherwise.

All of these algorithms have polynomial complexity. However, we still see a significant change going to n = 2000:

| Performance evaluation: n = 2000 | ||

|---|---|---|

| Name | Time | |

| MC Sr (fast) | 0. | 37 s |

| MC Sr | 0. | 43 s |

| Markus Großer (tutor) | 0. | 58 s |

| MC Sr (power series) | 1. | 4 s |

| Kseniia Chernikova | 3. | 3 s |

| Simon Stieger | 3. | 5 s |

| Wolf Birger Thieme | 5. | 5 s |

| Yecine Megdiche | 23. | 6 s |

| MC Sr (Akiyama–Tanigawa) | 60. | 4 s |

| Almo Sutedjo | 61. | 7 s |

| Marco Haucke | 75. | 8 s |

The MC Sr's Akiyama–Tanigawa implementation (MC Sr (AT)) is finally kicked out of the race. Herr Sutedjo's and Herr Haucke's implementation of the explicit formula does not survive this round either, nor does Mr Megdiche's memoised naïve recurrence.

With that, we have arrived at the podium. For good measure, let's go over 9000 – to 10000, to be precise:

| Performance evaluation: n = 10000 | ||

|---|---|---|

| Name | Time | |

| Markus Großer (tutor) | 18. | 8 s |

| MC Sr (fast) | 36. | 7 s |

| MC Sr | 39. | 4 s |

| MC Sr (power series) | 169. | 5 s |

| Kseniia Chernikova | 400. | 4 s |

| Simon Stieger | 493. | 0 s |

| Wolf Birger Thieme | 984. | 1 s |

In this step, the last three remaining student submissions get kicked out. In any case, they provide results within a reasonable time for n = 5000. They work like this:

- Herr Thieme's algorithm is a bit tricky to understand. It seems to use Pascal's triangle to compute the binomial coefficients and the Bernoulli numbers at the same time, using memoisation. He does not use any fancy data structures to store the memoised values, but he does not have to – all lists are always traversed left-to-right, so there are no expensive accesses anyway.

- Herr Stieger uses Faulhaber's triangle. The ith row of this triangle contains the coefficients of the polynomial 1i + … + ni that was mentioned on the problem sheet. In particular, the first column are precisely the Bernoulli numbers! Unfortunately, Herr Stieger's code contains a lot of conspicuous similarities to the one on Rosetta code (including identical spaces and variable names). The MC Sr pondered whether to disqualify him for this or not, but ultimately decided only to deduct 4 points – in the end, Herr Stieger at least made the effort to hand in something this week and he did de-obfuscate the code he found quite a bit, which demonstrates that he understands how it works. That is definitely worth something!

- Frau Chernikova did not compute the Bernoulli numbers directly, but instead computed the Tangent numbers. These are the sequence Tn = 1, 2, 16, 272, 7936, … that appears in the Taylor series expansion

It turns out that it is very easy to compute Bn given Tn, so the remaining question is how to compute Tn. Frau Chernikova does this using Seidel's triangle, which goes from one line to the next by summing the values of the line above right-to-left, then left-to-right, then right-to-left etc. This pattern is also known as the Boustrophedon transform from the Greek βοῦς (ox) and στροφή (turn), because one traverses the triangle in the same way that an ox ploughs a field.

The advantage of going through the Tangent numbers is that they are integers and can easily be computed using only integer operations, whereas the other approaches used so far all used arithmetic on rational numbers, which tends to become very expensive due to frequent GCD computations.

The MC Sr's main implementation therefore also uses the Tangent numbers, but computes them with a more efficient (but still quite simple) algorithm given in Section 6.1 of this lovely paper. The implementation MC (fast) uses the same algorithm, but tries to be a bit more efficient by only using a single array with imperative updates. As you can see, the difference is minimal – as so often in Haskell, the elegant algorithm is basically fast enough, and only very little extra performance can be gained by making it ugly.

For the sake of comparison, an artisanal hand-crafted optimised C++ program by the MC Sr that uses the same approach as MC (fast) needs about 17 seconds to compute B10000. That is only about twice as fast as the Haskell code, which seems not too bad for Haskell.

There are much crazier algorithms to compute Bernoulli numbers than this. Here are three of the MC Sr's favourite ones:

- A consequence of the Von Staudt–Clausen Theorem is that the denominator of the Bernoulli number B2n is the product of all prime numbers that divide 2n, which is easy to compute (well, relatively speaking, compared to how huge Bernoulli numbers are). The numerator is a bit more difficult to find: It is known that

where ζ is the Riemann ζ function that was mentioned on the problem sheet. Now, let a be the numerator and b the denominator (which we already know). If we rearrange, we find:

This means that if we approximate the right-hand side to sufficient accuracy, we can simply round to the nearest integer and thus obtain a.

- A different approach (also described in this paper in Section 5) uses the tangent numbers again: Recall that the tangent numbers can, by definition, be read off from the Taylor series expansion of tan(x). If we are interested in the Tangent numbers T0…Tm, we can simply define T'n = (2m+1)! / (2n+1)! Tn, which are the coefficients of the Taylor expansion of (2m+1)tan(x) and, crucially, also integers. We can then approximate (2n+1)tan(10-p) for some sufficiently large p and read off the Tn! For instance, for m = 3 and p = 4, we get (2n+1)tan(10-p) = 0.5040 0000 1680 0000 0672 0000 0272, from which we can read off the values 5040, 1680, 672, 272. Converting these T'n back to Tn, we get 1, 2, 16, 272 as expected. With some tricks, all of this can be implemented entirely with integer arithmetic (of course, using powers of two instead of powers of ten); however, it is quite difficult to implement this efficiently because the numbers involved get absolutely huge – we're talking many millions of digits. The MC included a draft of an implementation of this as MC (power series), and it did okay.

- Our tutor Herr Großer went a bit overboard and implemented Harvey's multimodular algorithm that computes Bn modulo many different prime numbers (the MC is not quite sure how) and then uses the Chinese Remainder Theorem to reconstruct the actual value of Bn. This is probably the fastest known algorithm to date for large n, so it is not too surprising that Herr Großer's solution wins over the MC Sr's for n = 10000.

What have we learned this week?

- Exponential algorithms are not good, but not handing in a solution at all is much worse.

- Memoisation is a nice and straightforward way to gain performance.

- Doing a bit of research (e.g. googling) is good and can secure you a top place – but do not simply copy-paste code or you will make the MC grumpy. You do not want the MC to be grumpy. That goes for all the MCs you will encounter, but the MC Sr is the oldest and therefore already has a high baseline value of grumpiness.

- It is not quite obvious how to optimise in Haskell. The lecture will briefly talk about this, but probably not go into too much detail. As a quick primer:

- Haskell is lazy (if code doesn't get executed, it probably doesn't incur any cost, so eliminating it doesn't give you any benefit)

- However, large unevaluated thunks are usually bad because they consume a lot of memory, and a lot of memory means a lot of garbage collection.

- GHC is really good at optimising, so many ‘optimisations’ a beginner may try to do by hand will have next to no effect. In particular, operations on lists (map, filter, foldr, length, etc.) are usually fused, so attempting to pack all of that into a single tail-recursive function to avoid building the intermediate lists is commendable, but usually pointless.

- Efficient algorithms do not have to be long or ugly, especially in Haskell.

- Herr Großer has too much time on his hands.

By the way, the MC Sr is introducing a new feature next week: If you have any important comments for the MCs (e.g. an alternative solution that somehow does not make it through the test system), please include them in your submission like this to ensure they do not miss them:

{-MCCOMMENT Hello MC! Here is my alternative solution: bernoulli n = 4 -}

Die Ergebnisse der vierten Woche

| Top 30 der Woche | |||

|---|---|---|---|

| Platz | Wettbewerber*in | Performance | Punkte |

| 1. | Simon Stieger | 2.10 | 30 |

| 2. | Anton Baumann | 2.9 | 29 |

| 3. | Marco Haucke | 2.8 | 28 |

| 4. | Martin Fink | 2.7 | 27 |

| 5. | Bilel Ghorbel | 2.6 | 26 |

| 6. | Almo Sutedjo | 2.5 | 25 |

| 7. | Tobias Hanl | 2.4 | 24 |

| 8. | Maisa Ben Salah | 2.3 | 23 |

| 9. | Nikolaos Sotirakis | 2.2 | 22 |

| 10. | Leon Julius Zamel | 2.1 | 21 |

| 11. | Yi He | 2.0 | 20 |

| Jonas Maximilian Weigand | 2.0 | 20 | |

| Nico Greger | 2.0 | 20 | |

| 14. | Daniel Strauß | 1.0 | 10 |

| Johannes Bernhard Neubrand | 1.0 | 10 | |

| Christoph Wen | 1.0 | 10 | |

| Damian Depaoli | 1.0 | 10 | |

| Justus Schönborn | 1.0 | 10 | |

| Paul Andrei Sava | 1.0 | 10 | |

| Qingyu Li | 1.0 | 10 | |

| Jonas Fill | 1.0 | 10 | |

| Björn Henrichsen | 1.0 | 10 | |

| Dominik Glöß | 1.0 | 10 | |

| Steven Lehmann | 1.0 | 10 | |

| Xianer Chen | 1.0 | 10 | |

| Timur Eke | 1.0 | 10 | |

| Anna Franziska Horne | 1.0 | 10 | |

| Kevin Schneider | 1.0 | 10 | |

| Denis Belendir | 1.0 | 10 | |

| Margaryta Olenchuk | 1.0 | 10 | |

This week, we received an encouraging 96 (correct) submissions, teaching us to always award a homework point for the Wettbewerbsaufgabe. To whittle these down to a Top 30, we spell checked 5 misspelled words of 5-10 characters against a dictionary of the 100 most common english words. Even unoptimised solutions should be able to do this in a fraction of a second. Setting the cutoff at 2 s leaves us with 30 submissions. As usual, we also have some entries hors compétition: A hyperoptimized solution by the MC Sr, an implementation based on BK-trees by co-MC Rädle (the MC Jr Sr?), and one using a simple but highly effective optimization by co-MC Stevens (the MC Jr Jr). More on these later.

Similar to last week, we will proceed in stages to distinguish submissions with different asymptotic running times. In the first phase, we simply ran the same test as before but increased the size of the dictionary to 1000 words:

| Performance evaluation: 5 misspellings, dictionary size 1000 | ||

|---|---|---|

| Name | Time | |

| MC Sr | 0.03 s | |

| Simon Stieger | 0.03 s | |

| Anton Baumann | 0.03 s | |

| Marco Haucke | 0.04 s | |

| Bilel Ghorbel | 0.04 s | |

| Nikolaos Sotirakis | 0.04 s | |

| Tobias Hanl | 0.04 s | |

| Maisa Ben Salah | 0.05 s | |

| Almo Sutedjo | 0.05 s | |

| Leon Julius Zamel | 0.05 s | |

| Martin Fink | 0.06 s | |

| BK Tree (co-MC Rädle) | 0.06 s | |

| Yi He | 0.09 s | |

| Early Exit (co-MC Stevens) | 0.11 s | |

| Jonas Maximilian Weigand | 0.19 s | |

| Nico Greger | 0.23 s | |

| Daniel Strauß | > 5 s | |

| Johannes Bernhard Neubrand | > 5 s | |

| Christoph Wen | > 5 s | |

| Damian Depaoli | > 5 s | |

| Justus Schönborn | > 5 s | |

| Paul Andrei Sava | > 5 s | |

| Qingyu Li | > 5 s | |

| Jonas Fill | > 5 s | |

| Björn Henrichsen | > 5 s | |

| Dominik Glöß | > 5 s | |

| Steven Lehmann | > 5 s | |

| Xianer Chen | > 5 s | |

| Timur Eke | > 5 s | |

| Anna Franziska Horne | > 5 s | |

| Kevin Schneider | > 5 s | |

| Denis Belendir | > 5 s | |

| Margaryta Olenchuk | > 5 s | |

Despite the generous time limit of 5 s, this already eliminates a whopping 17 contestants. All of them implemented lightly optimized versions of the recurrence on the problem sheet, which leads to a comfortably exponential running time. Here is a relatively clean example of such a solution, using only material that has been covered in the lecture:

editDistance :: Eq a => [a] -> [a] -> Int editDistance a b = dab (length a - 1) (length b - 1) where m = (length a) + (length b) dab i j = minimum [if i < 0 && j < 0 then 0 else m, if i >= 0 then 1 + dab (i-1) j else m, if j >= 0 then 1 + dab i (j-1) else m, if i >= 0 && j >= 0 && a!!i == b!!j then dab (i-1) (j-1) else m, if i >= 0 && j >= 0 && a!!i /= b!!j then 1 + dab (i-1) (j-1) else m, if i >= 1 && j >= 1 && a!!i == b!!(j-1) && a!!(i-1) == b!!j then 1 + dab (i-2) (j-2) else m ] spellCorrect :: [String] -> [String] -> [[String]] spellCorrect d [] = [] spellCorrect d (x:xs) = spellCorrectWord d x : spellCorrect d xs spellCorrectWord :: [String] -> String -> [String] spellCorrectWord d s = let dists = map (\ s' -> (s',editDistance s s')) d minDist = minimum (map snd dists) in map fst $ filter (\ (_,d) -> d == minDist) dists

There are two obvious approaches to improving the performance of spellCorrect: Make editDistance faster; or avoid running editDistance for every word in the dictionary. A good example of the latter approach can be found in Martin Fink's submission. While iterating through the dictionary, his correct function keeps track of the lowest edit distance found so far. If the difference in lengths between the search word and the next dictionary word is larger than this distance, then there is no need to compute the edit distance for this pair, since abs (length x - length y) is a lower bound on editDistance x y.

Co-MC Stevens takes this approach to the extreme. He passes the minimum distance found so far to his editDistance function, and simply aborts the computation of the distance if it can no longer be below this threshold. As we shall see, this is highly effective if there are words with low edit distance to the search string in the dictionary; which you would expect to be the case in a realistic spell checking scenario.

These optimizations do not improve the asymptotic worst case running time of the algorithm, however. In order to achieve a polynomial running time, all solutions that survived this round employ dynamic programming. That is, they avoid recomputing distances by storing intermediate values in lists or arrays. A somewhat readable example of this approach comes courtesy of Herr Zamel:

editDistance :: Eq a => [a] -> [a] -> Int editDistance xs ys = mem !! (length xs) !! (length ys) where mem = [[ed (i,j) | j <- [0..(length ys)]] | i <- [0..(length xs)]] ed (i,j) = minimum $ filter (>=0) [c1 i j, c2 i j, c3 i j, c4 i j, c5 i j] c1 i j = if i == 0 && j == 0 then 0 else -1 c2 i j = if i > 0 then (mem !! (i-1) !! j) + 1 else -1 c3 i j = if j > 0 then (mem !! i !! (j-1)) + 1 else -1 c4 i j = if i > 0 && j > 0 then if xs !! (i-1) == ys !! (j-1) then (mem !! (i-1) !! (j-1)) else (mem !! (i-1) !! (j-1)) + 1 else -1 c5 i j = if i > 1 && j > 1 && xs !! (i-1) == ys !! (j-2) && xs !! (i-2) == ys !! (j-1) then (mem !! (i-2) !! (j-2)) + 1 else -1

Here, the results of individual calls to ed are stored in the table mem. This requires very little extra implementation effort compared to the naive solution but brings with it a huge improvement in performance.

For the next stage we move to a different corpus, containing misspellings made by British and American students. As a first test, we picked 100 misspellings at random and ran them against the entire dictionary of 6137 correctly spelled words:

| Performance evaluation: 100 misspellings, dictionary size 6137 | ||

|---|---|---|

| Name | Time | |

| MC Sr | 1.44 s | |

| Simon Stieger | 1.84 s | |

| Anton Baumann | 2.19 s | |

| Early Exit (co-MC Stevens) | 2.23 s | |

| BK-tree (co-MC Rädle) | 2.44 s | |

| Marco Haucke | 3.80 s | |

| Nikolaos Sotirakis | 4.75 s | |

| Bilel Ghorbel | 4.95 s | |

| Tobias Hanl | 5.13 s | |

| Almo Sutedjo | 5.33 s | |

| Maisa Ben Salah | 5.44 s | |

| Martin Fink | 6.36 s | |

| Leon Julius Zamel | 6.61 s | |

| Yi He | > 10 s | |

| Jonas Maximilian Weigand | > 10 s | |

| Nico Greger | > 10 s | |

The time limit of 10 s eliminates 3 further students, leaving us with a Top 10. The eliminated students implemented dynamic programming but construct and update their tables in very inefficient ways, mainly by using excessive amounts of ++ operations, which are linear in their first argument.

In order to get some separation between the remaining submissions, we increase the number of misspellings to 3000:

| Performance evaluation: 3000 misspellings, dictionary size 6137 | ||

|---|---|---|

| Name | Time | |

| MC Sr | 26.72 s | |

| Simon Stieger | 32.99 s | |

| BK-tree (co-MC Rädle) | 36.38 s | |

| Anton Baumann | 41.29 s | |

| Early Exit (co-MC Stevens) | 41.83 s | |

| Marco Haucke | 91.67 s | |

| Bilel Ghorbel | 104.79 s | |

| Nikolaos Sotirakis | 116.16 s | |

| Martin Fink | 121.47 s | |

| Maisa Ben Salah | 123.43 s | |

| Tobias Hanl | 125.93 s | |

| Almo Sutedjo | 130.01 s | |

| Leon Julius Zamel | 151.81 s | |

Co-MC Stevens' submission is still up there, even though it uses a completely unmemoized implementation of the edit distance. This is because the words in this dictionary are quite short, and it is therefore likely that a pair with low edit distance will be found relatively quickly. Once such a pair has been found, his early exit optimization is able to discard most words after looking at a few letters.

This drastically changes, however, once we begin to look at longer words where a pair with low edit distance may not be found easily. In order to find a suitable test case that shows this, English is no longer sufficiently powerful. Only by switching to good old Deutsch can we make use of words like Straßenentwässerungsinvestitionskostenschuldendienstumlage or Rinderkennzeichnungsfleischetikettierungsüberwachungsaufgabenübertragungsgesetz.

For our last test case, we used a dictionary derived from the duden corpus, but discarded all those boring words of fewer than 15 characters. This leaves us with 94061 words, which we tested against 20 Bandwurmwörter of 32 - 81 characters. This might seem pretty brutal, but some submissions were able to handle this test quite well:

| Performance evaluation: 20 Bandwurmwörter, dictionary size 94061 | ||

|---|---|---|

| Name | Time | |

| MC Sr | 16.81 s | |

| Simon Stieger | 21.90 s | |

| Anton Baumann | 22.18 s | |

| Martin Fink | 112.68 s | |

| BK-tree (co-MC Rädle) | 133.04 s | |

| Marco Haucke | 203.91 s | |

| Almo Sutedjo | 218.58 s | |

| Tobias Hanl | 294.11 s | |

| Bilel Ghorbel | 309.47 s | |

| Maisa Ben Salah | 355.84 s | |

| Leon Julius Zamel | 365.17 s | |

| Nikolaos Sotirakis | 583.92 s | |

| Early Exit (co-MC Stevens) | > 600 s | |

Notably, the Herren Stieger and Baumann were able to stay quite close to the MC Sr's contribution. Both implemented dynamic programming using arrays and added the previously described optimization that avoids computing the edit distance of pairs whose length differential is sufficiently large. Herr Fink also uses this optimization, but his choice of lists instead of arrays slows him down somewhat. Herr Stieger additionally implemented the early exit optimization.

To get a little bit of extra performance, the MC Sr strips common prefixes and suffixes before computing the edit distance and uses linear space in his editDistance implementation by keeping only two rows of the table. He also implemented parallelism, but our tests were run on a single thread. Otherwise, his solution is quite similar to Herr Stieger's.

Another avenue for optimization is preprocessing the dictionary, although none of the student submissions took this opportunity. Co-MC Rädle, however, stores his dictionary as a BK-tree, which is a tree where the edit distance between a node and its k-th child (if it exists) is exactly k. When running a search word against this tree representation of the dictionary, one uses the fact that the edit distance is a metric and thus fulfills the triangle inequality. We can search for words with distance of at most d from the search word as follows: If the distance between the root and the search word is n, then by the triangle equality, the distance between the k-th child and the search words is at least n-k. We therefore consider only children in the range [d-n..d+n] in our search, because children outside this range have a distance of at least n-(d+n+1) = d+1 to the search word.

This means that we do not need to compare every word in the dictionary against the search word; empirical results suggest that on an average search ~20% of the dictionary is considered. If a word is a possible candidate, however, the edit distance needs to be computed in full in order to determine which children need to be included in the search; no early exit optimization is possible. Additionally, we cannot discard words based on their length, because it is not sufficient to know that the edit distance is larger than a certain value -- we need to know the exact edit distance to proceed. This is why the BK-tree based implementation does well in the benchmarks, but is not able to compete with the very best solutions.

What have we learned this week?

- We should always award homework points for the Wettbewerbsaufgabe, lest we get fewer and fewer submissions.

- Wettbewerbspunkte need not be hard to come by; lightly optimized solutions scored 10 points this week.

- Dynamic programming can be simple to implement and highly effective.

- Computing something quickly is still slower than not computing it at all. (cf. co-MC Stevens)

- Sometimes different types of optimization can get in the way of each other, as is the case with BK-trees.

- The students are coming closer than ever to besting the venerable MC Sr.

Die Ergebnisse der fünften Woche

*cough cough, sniff, inhale* yes, this is the MC Jr speaking. Little did he know when leaving his beautiful office that he shall not return for a 3 days. The reason for his long absence are the long paths through the intriguing nature surrounding the Garchosibirsk campus that the 67 delightful submissions for week 5 were computing. The MC Jr owes you a great debt of gratitude for this splendid experience.

Moreover, he received obligatory, highly optimised, linear time submissions by the MC Sr and the tutor that has too much time on his hands: Herr Großer.

As a matter of fact, the longest path problem is quite computationally expensive (NP-hard) in general, but for DAGs, like the Garchosibirsk campus, the problem is rather easy to solve (linear time).

Just like his more senior fellow, the MC Jr decided to conduct several phases of elimination.

So let's get down to business to defeat the Huns 🎶 to trenn die Spreu vom Weizen 🌾.

The first pre-processing step, round -1 (minus one), consists of eliminating all submissions that do not pass the tests on the server.

At this phase, we already have to say goodbye to one of the top ranked participants, Herr Stieger, who did not submit a working solution.

Just by chance, the MC Jr actually discovered that Herr Stieger submitted a solution using the {-MCCOMMENT...-} feature, but

nevertheless, the provided solution uses the unsafe Data.Array.ST module that does not compile on the server.

We are sorry Herr Stieger, but same rules for everyone:

- The submission needs to pass the tests on the server

- An optional, alternative solution provided in the comments needs to at least compile on the server

Herr Stieger, by the way, used the topological order of the graph – an approach that many top-ranking FPETs fancied as we will see later on.

Edit 26.12.2019: After having a talk with the MC Sr, it was decided to change Herr Stieger's submission to use the safe Data.Array.ST.Safe import and make his submission part of the competition. The ranking was updated accordingly.

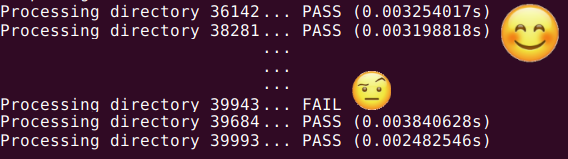

In round 0, the MC Jr decided to perform a very basic sanity check by running all submissions against the graph provided on the homework sheet:

Seeing all the tests pass made the MC Jr happier than a box of chocolate. But then, out of nowhere, the scary word appears: FAIL.

With great horror, he realised that one of his FPETs (Functional Programmers of Excellence in Training) failed the most simple test but still passed the tests on the submission server! He decided to have a look at the solution provided by the lucky Oleksandr Golovnya:

longestPath g t = head [snd x | x <- topsort [(v,0)| v <- fst g] (snd g), fst x == t] topsort [] e = [] topsort (v:vs) e = [v] ++ topsort [(fst x, if elem (fst v,fst x) e && 1+snd v > snd x then 1 + snd v else snd x) | x <- vs] e

First things first: Let's clean up the code.

longestPath (vs,es) t = let ts = topsort [(v,0) | v <- vs] es in head [c | (t',c) <- ts, t' == t] topsort [] es = [] topsort ((s,c):vs) es = (s,c) : topsort [(s, if elem (s,t) es then maximum (1 + c, c') else c') | (t,c') <- vs] es

That's better. Here are a few things to keep in mind:

- Split long expressions into simpler subexpressions to increase readability (e.g. let

tsdenote the result of thetopSortcall) - Use pattern matching on tuples to avoid

fstandsndcalls - Do not write

[x] ++ xsto concatenate a single element to a list; just usex : xs

Anyway, putting all the stylistic things aside, the topsort function sadly does not topologically order at all but merely recurses on all vertices in given order (which could be anything!) and updates the distances to all neighbours with higher index, completely neglecting the fact that there might be edges to vertices with a lower index.

How this fails can easily be seen when we use Herr Golovnya's submission on our small example containing and edge from node 4 to 3:

longestPath = ([1..5] , [(1 ,2) ,(2 ,3) ,(2 ,4) ,(3 ,5) ,(1 ,4) ,(4 ,3) ,(4 ,5)]) 5 = 3

The MC Jr sadly made things too easy on the test server and only generated graphs not containing such edges. So, you got lucky this time and well-deserve your homework point Herr Golovnya, but you are sadly out of the competition. Remaining FPETs: 66 + 2

Next, the MC Jr started elimination round number 1: checking for cheeky FPETs that just assumed that the MC Jr's stroll will always start at vertex number 1.

Taking the following simple graph reveals the sloppy instruction readers:

A long list of FAILs were printed on his GNU terminal of trust. 19 (that's almost a third of all submissions; #quickmaths) FPETs tried to trick the MC Jr.

Relentlessly, each and every one of them will be eliminated – the MC Jr will start his walks wherevere he wishes to!

Again, we have to say goodbye to some top-tier candidates at this stage, among others Frau Chernikova, Herr Soennecken, and Herr Haucke.

Just like Herr Stieger, they made use of a topological ordering algorithm.

The latter even hoped for some small-scale optimisations inlining his updateMap function and left the following comment:

Inlining this is akin to thinking that putting spoilers on your car adds horsepower, but now it looks a little more as if I knew what I was doing

{-# INLINE updateMap #-}

...

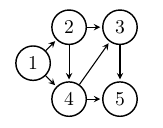

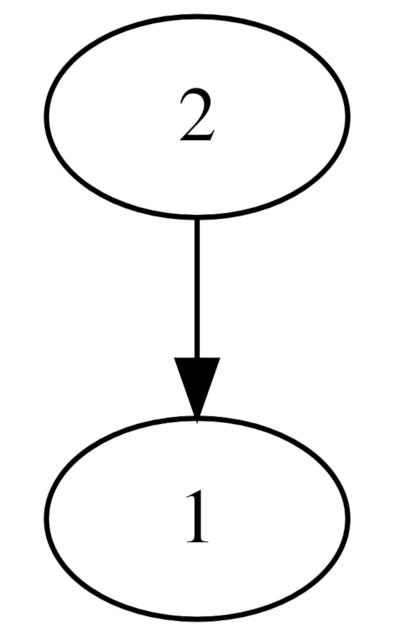

The MC Jr agrees and also suggests that instead of investing into small-scale optimisations, one should first prove one's implementaiton to be correct – in the best case, using Isabelle (#Dauerwerbesendung). Some other submissions got really confused with computer science and mathematical lingo, calling their topoligcal ordering a topology. The MC Jr hence kindly provides the following pictures that should clear up all confusion.

|

|

Finally, we get our hands feet dirty and start our first walk. A walk along 32 vertices. A walk so short, the MC Jr was barely able to leave the Magistrale.

A small number of 5 FPETs, however, took longer than a fifth of a second (an ETERNITY!) to compute the distance. Sad! Remaining FPETs: 42 + 2

And so the same game repeats: the MC Jr increases the number of nodes to 40 (a quick stroll to the Interimsgebäude), eliminating another 27 FPETs (15 + 2 remaining), and then, he cannot quite remember the exact number, but he guarantees something between 60 and 200 (a brisk walk to the U-Bahn), to eliminate a further 10 FPETs (5 + 2 remaining) that sadly did not quite make it into the group of big girls and boys.

Before continuing with the quarter-finals, the MC Jr decided to time the submissions kicked out thus far. After all, it is almost Christmas time and he wants to hand out some gifts aka. precious ranking points. Some rounds of competition on the Interimsgebäude graph led to the following ranking – congrats to all the winners so far 🎉:

| Result quarter-finalists | ||

|---|---|---|

| Rank | FPET | Points |

| 6. | Yi He | 25 |

| 7. | Mokhammad Naanaa | 24 |

| 8. | Matthias Ellerbeck | 23 |

| 9. | Emil Suleymanov | 22 |

| 10. | Pascal Ginter | 21 |

| 11. | Kaan Uctum | 20 |

| 12. | Sebastian Galindez Tapia | 19 |

| 13. | Tal Zwick | 18 |

| 14. | Tobias Hanl | 17 |

| 15. | Ricardo Vera Jacob | 16 |

| 16. | Yevgeniy Cherkashyn | 15 |

| 17. | Omar Eldeeb | 14 |

| 18. | Ernst Pappenheim | 13 |

| 19. | Leon Beckmann | 12 |

| 20. | Mira Trouvain | 11 |

| 21. | Erik Heger | 10 |

| 22. | Hui Cheng | 9 |

| 23. | Cosmin Aprodu | 8 |

| 24. | Björn Henrichsen | 7 |

| 25. | Daniel Anton Karl von Kirschten | 6 |

| 26. | Jonas Hübotter | 5 |

| 27. | Kevin Burton | 4 |

| 28. | Ali Mokni | 3 |

| 29. | Adrian Marquis | 2 |

| 30. | Heidi Albarazi | 1 |

Almost all quarter-finalists made use of basically the same idea: In order to compute the longest path from the source s to some node v, it suffices to recursively calculate the longest paths to v starting from v.

As, by assumption, our graph is a DAG and s is its only source node, the result of this computation will be the longest path from s to v.

In other words, if l(v) denotes the longest path from s to v, then l(v) satisfies the following recurrence relation:

l(v)=max({0} ∪ {l(w) | (w,v) ∈ E}).

This backwards traversal also has the nice effect that one does not need to explicitly search for the starting node s.

The MC Jr decided to show Herr Pappenheim's crisp one-line solution as a reference for this approach:

longestPath g@(vs, es) v = maximum (0:[1+(longestPath g x) | (x, a) <- es, a == v])

Note how Herr Pappenheim made use of the great as-patterns in order to inspect the components of g while still having the possibility to refer to g in its totality.

If the competition would have been about style and elegance, Herr Pappenheim surely would have won! ✨

Okay, now let's get real. For the semifinals, the MC Jr drew a graph from his office to the Maschinenwesen building. It's a dense graph containing 639 vertices, including the soft-opened Galileo (according to reliable sources, the hard opening is planned at about the same time as the opening of the BER). Running the semifinalist's submissions for one second reveals the four finalists. I am afraid, but the following FPETs got eliminated due to a timeout:

| Rank | FPET | Time | Points |

|---|---|---|---|

| 3. | Xiaolin Ma | 2.1s | 28 |

| 4. | Martin Achenbach | 6.22s | 27 |

| 5. | Le Quan Nguyen | TIMEOUT (>20s) | 26 |

An optimisation of the previously explained backwards traversal approach can be obtained by means of a tail-recursive implementation using an accumulator to store all so-far visited nodes and the so-far computed distance. This approach has been followed by Herr Nguyen, putting him on place 5 for this week. Congrats!

longestPath (node, edge) t = go [t] 0 where go [] n = n-1 go xs n = go (nub $ concat [ [w | (w,u)<-edge, u == x] | x<-xs]) (n+1)

Herr Ma and Herr Achenbach, however, made use of a topological order approach (that will be explained shortly), winning 28 and 27 points respectively. Congratulations! 🥳

For the finals, the MC Jr went to great pains and drew the whole Garchosibirsk campus containing a Fantastilliarde – in digits 20.000 – nodes and millions of edges using only a ruler and a compass. At first, he let the finalists compete on the whole dense graph he constructed. He then ran a second round on a very sparse graph that still contained 20.000 nodes but only 40.000 edges. The finalists' approaches can be summarised as follows:

- Herr MC Sr Manuel Eberl and Herr Ghorbel take the optimisation of the backwards traversal approach a step further. Herr Eberl uses an optimised version of

nubfor integers, hash maps instead of lists for quicker lookups, and a memoisation map storing the values of the so-far computed l(v) values. Herr Ghorbel decided to use Arrays and the graph library provided by Haskell instead.

Implementation by the MC Sr-- Faster O(n log n) nub specialised for integers. -- Probably doesn't make a difference but still. intNub :: [Int] -> [Int] intNub = map head . group . sort longestPath :: Graph -> Vertex -> Int longestPath (vs, es) v = longestPath' v where succMap = HS.map intNub $ HS.fromListWith (++) [(v, [u]) | (u, v) <- es] succs v = HS.lookupDefault [] v succMap m = HS.fromList [(v, longestPath'' v) | v <- vs] longestPath' v = fromJust (HS.lookup v m) longestPath'' v = maximum (0 : map ((+1) . longestPath') (succs v))

Implementation by Herr Ghorbel. It is not quite clear to the MC Jr whether using the topological sorting to construct the indices (maxi::[Int]->Int maxi [] = -1 maxi xs = maximum xs longestPath :: Graph-> Vertex -> Int longestPath (v,e) s = f s where cmp (a,_) (b,_) = compare a b min_v = minimum v max_v = maximum v graph = buildG (min_v,max_v) e tgraph = transposeG graph topo = topSort graph iTable = listArray (min_v,max_v) (map snd (sortBy cmp (zip topo [min_v..max_v]))) f x = 1 + maxi [memo!(iTable!nxt)|nxt<-(tgraph ! x)] memo = listArray (min_v,max_v) (map f topo)

iTable) for the memoisation mapmemohas any benefit. A quick experiment did not show any major performance difference when replacing the last four lines by the following two:

f x = 1 + maxi [memo!nxt|nxt<-(tgraph ! x)] memo = listArray (min_v,max_v) (map f [min_v..max_v])

- Herr Großer and Herr Sutedjo used a varient of the following topological order approach:

- Create a topological order of the graph. This can be done, for example, by using a depth-first-search algorithm, or by using the following, more visually appealing, strategy: select all nodes without incoming edges, remove them from the graph (including their outgoing edges), and store them at the front of the ordering. Then repeat until you are left with nothing but cold void.

Topological order algorithm (source) - Process all vertices in topological order. For every vertex being processed, update the distances to its neighbours using the distance of the current vertex.

- Create a topological order of the graph. This can be done, for example, by using a depth-first-search algorithm, or by using the following, more visually appealing, strategy: select all nodes without incoming edges, remove them from the graph (including their outgoing edges), and store them at the front of the ordering. Then repeat until you are left with nothing but cold void.

It was a close race, but the final results are *can I please get some drums rolling*

🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁🥁| Results finalists | ||||

|---|---|---|---|---|

| Rank | FPET | Time dense graph | Time sparse graph | Points |

| 1. | Bilel Ghorbel | 3.65s | 0.47s | 30 |

| (out of competition) 2. | Markus Großer | 3.93s | 0.5s | |

| 3. | Almo Sutedjo | 3.99s | 0.52s | 29 |

| (out of competition) 4. | Herr MC Sr Manuel Eberl | 4.09s | 0.54s | |

Note: As you can clearly see in this beautifully formatted table, the MC Jr has a very strong web-development background.

So what have we learned this week?

- Make sure you submit something that passes the tests on the server.

- Passing the tests on the server does not guarantee correctness of the submission. Sorry Herr Golovnya and all "vertex number 1"-starters! But no need to worry. There's nothing lost and many points still to gain in upcoming competitions!

- Putting spoilers on one's car only gets you style points in Need for Speed but not the legendary FPV competition.

- A cow is a sphere. In symbols: 🐄 = 🌐

- Elegant and short implementations can get you very far

- Complicated implementations might get you even further *looking at Herr Großer*

- Using optimised libraries and cleverness gets you the furthest!

- Garchosibirsk is a mysterious, huge place.

- The MC Jr is a huge Mulan and Forrest Gump fan.

- Just to say it again: writing competition entries takes forever; it's 3am, good night! *drops mic*

Lastly, here's the combined result table for week 5. If you have any complaints, contact the MC Jr – he still is a MC in training and total correctness is hence not guaranteed.

Edit 26.12.2019: Herr Stieger's submission (modifed using safe array imports) has been added to the competition, scoring a bronze medal. Congratulations! The MC Jr decided to deduct 5 points for his unsafe imports that manually had to be changed and, as a matter of fairness, keep everyone else's points the same.

| Results week 5 | ||

|---|---|---|

| Rank | FPET | Points |

| 1. | Bilel Ghorbel | 30 |

| 2. | Almo Sutedjo | 29 |

| 2. | Simon Stieger | 28-5=23 |

| 3. | Xiaolin Ma | 28 |

| 4. | Martin Achenbach | 27 |

| 5. | Le Quan Nguyen | 26 |

| 6. | Yi He | 25 |

| 7. | Mokhammad Naanaa | 24 |

| 8. | Matthias Ellerbeck | 23 |

| 9. | Emil Suleymanov | 22 |

| 10. | Pascal Ginter | 21 |

| 11. | Kaan Uctum | 20 |

| 12. | Sebastian Galindez Tapia | 19 |

| 13. | Tal Zwick | 18 |

| 14. | Tobias Hanl | 17 |

| 15. | Ricardo Vera Jacob | 16 |

| 16. | Yevgeniy Cherkashyn | 15 |

| 17. | Omar Eldeeb | 14 |

| 18. | Ernst Pappenheim | 13 |

| 19. | Leon Beckmann | 12 |

| 20. | Mira Trouvain | 11 |

| 21. | Erik Heger | 10 |

| 22. | Hui Cheng | 9 |

| 23. | Cosmin Aprodu | 8 |

| 24. | Björn Henrichsen | 7 |

| 25. | Daniel Anton Karl von Kirschten | 6 |

| 26. | Jonas Hübotter | 5 |

| 27. | Kevin Burton | 4 |

| 28. | Ali Mokni | 3 |

| 29. | Adrian Marquis | 2 |

| 30. | Heidi Albarazi | 1 |

Die Ergebnisse der sechsten Woche

We at the Übungsleitung like to change things up and therefore the honourable task of writing this week's competition blog entry was bestowed upon the MC Jr Jr, Lukas Stevens.

In this week we received a grand total of 109 submissions that passed the tests on the server and had Wettbewerbstags.

As is tradition, some students managed to place the tags incorrectly which the MC Jr Jr generously fixed for them.

Additionally, the hacky lovingly handcrafted tool to evaluate the Wettbewerbseinreichungen is not nearly as sophisticated as the submission system; due to this Umstand, the tool breaks if a student omits the type annotations.

The MC Jr Jr embarked on a quest to fix these type annotations which was about as tedious as beating Pokémon without taking a single hit (this might be a slight hyperbole).

Tedium aside, the MC Jr Jr was delighted to see a new strategy emerge that allows students to eliminate other competitors.

This strategy, which we will discuss further down, forced one unlucky student to perform a Pofalla-Wende and thus we are left with 108 submissions.

Originally, this week's IBM Award for Software of Questionable Quality would have gone to a solution with 153 tokens; however, the MC Jr Jr noticed that the submission included the example prime program inside the tags upon closer inspection. This confirmed the suspicion that not even a very creative student could overcomplicate the task by that much. Instead, the award is granted to the solution of another student which is layed out in all its glory below.

t31 (a,b,c) = a t32 (a,b,c) = b t33 (a,b,c) = c traceFractran :: [Rational] -> Integer -> [Integer] traceFractran rs n | t31 aux2 = (t32 aux2) : (traceFractran rs (numerator $ t33 aux2)) | otherwise = [n] where aux2 = aux rs n aux [] n = (False, 0, 0%1) aux (r:rs) n = if denominator (n%1 * r) == 1 then (True, n, n%1 * r) else aux rs n

Apparently, the student in question is not afraid of loosing a billion dollars and implements a tagged tuple that looks suspiciously like a null-reference (the first component being a flag that indicates whether the tuple is null).

This could be solved more elegantly using the Maybe type, but as Maybe hadn't been introduced in the lecture at that point the student may be forgiven in this instance.

Nevertheless, the model solution by the semi-venerable MC Jr Sr only uses material from the lecture while achieving a reasonable degree of conciseness.

With 49 tokens, this solution is only 2 tokens short of being in the top 30 and this without any serious effort to obfuscate optimise for number of tokens.

traceFractran fs n = let prods = [numerator (n%1 * f) | f <- fs, denominator (n%1 * f) == 1] in if null prods then [n] else n : traceFractran fs (head prods)

The following submission by Herr Tal Zwick illustrates that a few improvements to the model solution are sufficient to place in the top 30:

- Use

whereinstead oflet ... in. - The infix notation

`traceFractran`may allow you to omit parentheses. - In Haskell,

ifis an expression so it can be used (almost) anywhere.

Achieving rank 16 in the Wettbewerb doesn't require arcane Haskell knowledge after all!

traceFractran fs n = n:if null nfs then nfs else fs `traceFractran` head nfs where ni = fromIntegral n nfs = [numerator $ f*ni | f <- fs, 1 == denominator (f*ni)]

Instead of optimising for tokens, Herr Andreas Resch unintentionally employed a novel technique to eliminate other competitors: Herr Resch notified the MC Jr Jr that his solution passes the testcases on the server despite being incorrect. Consequently, the MC Jr Jr came up with additional tests to determine which students tried to gain a competitive advantage through a disgraceful disregard for the specification. If not for those additional tests, the winner of this week's competition would have been Herr Peter Wegmann. He provided the following implementation with 29 tokens that is as elegant as it is wrong.

traceFractran xs y = y : concat [traceFractran xs $ numerator $ x * fromIntegral y | x<-xs, y `mod` denominator x == 0]

Here, traceFractran is called recursively every time the we get an integer as the product of a rational in the list xs and the accumulator y.

This gives erroneous results as soon we have more than one such product.

As Herr Resch was aware of this issue, he cleverly provided an alternative solution, which keeps him in the competition ganz im Gegenteil zu Herr Wegmann who is promptly eliminated.

The downside is that the alternative solution has 40 instead of the original 30 tokens which puts him in tenth place in the end.

The submission of one other competitor, namely Herr Emil Suleymanov, had the same bug.

Fortunately, his submission contained a simpler and also correcter version of traceFractran.

The MC Jr Jr has doubts that Herr Suleymanov knowingly circumvented the specification but he decided to let Gnade walten and keep him in the race with the latter version.

Nevertheless, this brings him from 29 up to 37 tokens thus placing him firmly on rank seven.

The takeaway is that this tactic should be applied judiciously lest you eliminate yourself from the top 30.

All of the above implementations are quite basic and only use material from the lecture.

Of course there are some people who just can't keep it simple like Herr Johannes Neubrand.

Not only does he use the constructor (:%), which not even a Sith would tell you about, to match on Rationals, he also just learnt about all kinds of monadic operators.

Such operators are used liberally throughout his implementation, sometimes even to the detriment of the token count (as seen in the implementation of integerProduct).

It shows that we teach him well because he is becoming a true Haskell programmer who values beauty over everything else.

The solution is actually (kind of) straightforward:

integerProductonly returns the result of the multiplication of its arguments if that result is an integer. Otherwise it returnsNothing.concatMap (traceFractran fs) rsconcatenates the lists that result from applyingtraceFractran fsto each element in rs.- Here,

msumreturns the first element in a list that isJust xandNothingotherwise. - Finally,

integerProduct n <$> fsis equivalent tomap (integerProduct n) fs.

integerProduct :: Integer -> Rational -> Maybe Integer integerProduct x f = n <$ guard (d == 1) where n :% d = fromIntegral x * f -- Alternative implementation of integerProduct by the MC Jr Jr integerProduct x f = case fromIntegral x * f of n :% 1 -> Just n _ -> Nothing traceFractran :: [Rational] -> Integer -> [Integer] traceFractran fs n = n : -- we were definitely able to reach this one ( concatMap (traceFractran fs) -- because: instance Foldable (Maybe a) $ msum -- first Just or Nothing $ integerProduct n <$> fs )

Further interesting optimisation were done with monadic operators, e.g. Herr Haucke used mempty instead of [].

Nevertheless, to get near the top of the leaderboard, monadic operators were not necessary as the submissions of Herr Martin Fink and Herr Simon Stieger illustrate.

Both of them achieved rank 2 by essentially providing a correct version of Herr Wegmanns solution with 33 tokens.

The only significant adjustment is to use take 1 such that only the first product that results in an integer is considered in each step.

Thus, the code loses little of its elegance and still only uses material from the lecture.

traceFractran rs n = n : concat (take 1 [rs `traceFractran` floor (fromIntegral n * r) | r <- rs, n `mod` denominator r == 0])

With all that said, the best submission by Herr Almo Sutedjo did employ some monadic operators to get down to 30 tokens for which the MC Jr Jr sincerely congratulates him. This is an impressive feat considering that he not only surpassed all the other students but also bested Herr Markus Großer by 1 token whose resources don't seem to be infinite after all. The details of Herr Sutedjos solution are explained below.

- Again, the operator

<$>is used here instead ofmap. - The expression

liftM2 eqInteger truncate numeratorconstructs a function that compares the numerator of a rational numberrwith its truncated form (the integer closest torfrom 0). Thus, the expression returns true if, and only if,ris an integer. fromMaybeunwraps a Maybe and uses the first argument as a default value in case it encountersNothing.findtries to find an element in a list that satisfies the given predicate. This is also the function that Herr Oleksandr Golovnya was looking for. To find just the function you need, hoogle is a helpful tool.

traceFractran rs n = n : fromMaybe [] (traceFractran rs <$> numerator <$> (liftM2 eqInteger truncate numerator) `find` map (fromIntegral n *) rs)

Even though the above solution is impressive, there is still room for improvement: using mempty instead of [] and omitting the parentheses around liftM2 would have landed Herr Sutedjo at a comfortable 27 tokens.

This brings us very close to the ever-unbeatable MC Sr who came up with the following solution that has 26 tokens:

denominator &&& numerator <<< (* fromIntegral n)uses the combinators fromControl.Arrowto construct a function that first multiplies a rational numberrbynand then returns a tuple where the first component is denominator and the second component the numerator ofr * n.- After mapping this function over the list

xs, we find the first numerator whose corresponding denominator is 1 usinglookup 1. - Ultimately,