Quicklinks

- Participation

- Evaluation

- Ranking and Prizes

- Top 30

- Week 1 (updated 26/11/2020)

- Week 2 (updated 09/12/2020)

- Week 3 (updated 17/12/2020)

- Week 4

- Week 5

- Week 6

- Week 7

- Week 8

- FPV-Programming Contest

- Schönheitswettbewerb & Semester Closing (updated 15/02/2021)

The Wettbewerb 🏆

Welcome to the famous FPV-Wettbewerb! The Wettbewerb is a longstanding tradition [1, 2, 3, 4], started by the Master of Competition (MC) emeritus Jasmin Blanchette in 2012. Much time has passed since then, the MC emeritus is now a professor in Amsterdam, and the Wettbewerb a prestigious export hit making its way even to ETH-Zürich [1, 2]. Still, the Wettbewerb progresses more or less as always, mutatis mutandis, and the team of MCs will attempt to keep the old spirit alive. (If you are confused by the style of this blog, the fact that the MC refers to himself in the third person, or the odd mixture of German, English, and occasional other languages – that is also a legacy of the MC emeritus and changing it now would be a serious offence.)

Each exercise sheet will be host of a magnificently written competition exercise by Herr MC Sr Eberl, Herr MC Jr Sr Rädle, Herr MC Jr Kappelmann, or Herr MC Jr Jr Stevens.

Participation 📄

You may use any function from the following packages for your solution: base, array, containers, unordered-containers, binary, bytestring, hashable, QuickCheck, text

Your solution will only be taken into consideration for the Wettbewerb if it contains correctly placed {-WETT-}...{-TTEW-} tags. All of the relevant code, including all auxiliary functions used for it must be inside these tags.
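As a rough illustration of tag placement, here is a minimal sketch of what a submission file might look like. The function names are invented for this example and do not come from any exercise sheet; the only thing taken from the rules above is that the competition entry and all of its helpers sit between {-WETT-} and {-TTEW-}.

```haskell
-- Hypothetical homework file; all function names are invented for illustration.

-- Outside the tags: not part of the competition entry.
notCounted :: Integer -> Integer
notCounted = (+ 1)

{-WETT-}
sumOfSquares :: [Integer] -> Integer
sumOfSquares = sum . map square

-- Auxiliary functions used by the entry must sit inside the tags as well.
square :: Integer -> Integer
square x = x * x
{-TTEW-}
```

Since the tags are ordinary Haskell block comments, they do not affect compilation.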

Important: By participating in the Wettbewerb, you agree that your name may appear in the ranking on the website. If you do not want to participate, submit your homework without the {-WETT-}...{-TTEW-} tags. Your homework points are not affected by this.

Evaluation ✅

These exercises count just like any other homework, but are also part of the official competition – overseen and marked by the MCs. The grading scheme for these exercises will vary: performance, minimality, or beauty are just some factors the MCs value.

Sometimes, the MC will ask you to minimise the number of tokens of your code. For an explanation of what a token is precisely, see last year’s Wettbewerb website. To count the tokens of some code, you can use the MC emeritus’s token counting program on your own machine. Additionally, the MC Sr graciously allows you to use his convenient online token-counting service.

Caution: both of these token-counting programs take top-level type signatures like f :: Int -> Int into account when counting tokens. The MC, however, does not take them into account. He removes them before counting, and so should you.

However: your submitted file must contain these signatures. They are present in the template; do not delete them.

Ranking and Prizes 🥇📚💸

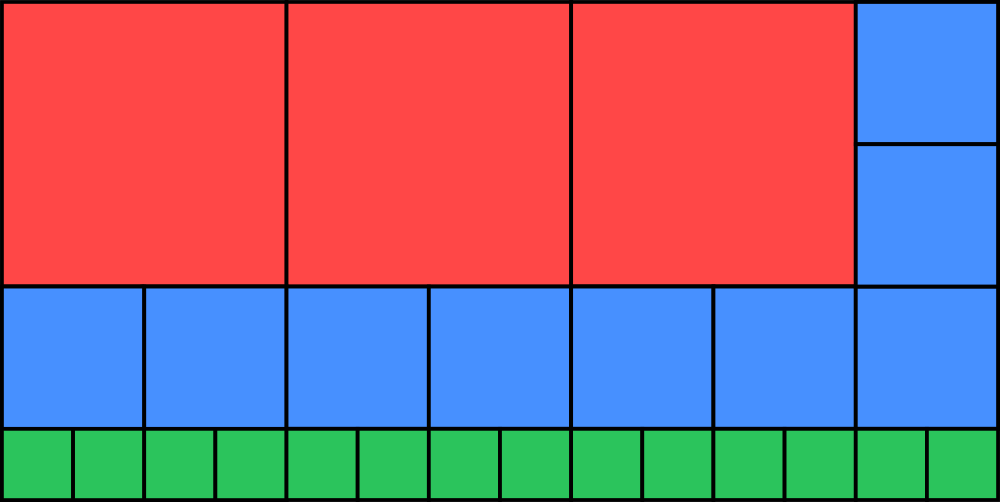

At least biweekly, the top 30 students of the past challenge will be crowned and the top 30 students of the semester updated.

The best 30 solutions will receive points: 30, 29, 28, and so on.

The MCs’ favourite solutions will be cherished and discussed on this website.

At the end of the semester, the best k students will be celebrated with tasteful prizes, kindly provided by our sponsors, where k is a natural number whose value will be determined when time has come.

Some prizes might also be awarded to outstanding, but competition-avoiding students.

The prizes include

- Invitations to magical gatherings 🧙🧙♂️ (BOB2021)

- Modern scrolls of sorcery 📜 (magnificent programming books)

- Schotter 💶 and quid 💷

Moreover, some of our sponsors will hold special Haskell workshops that you can join 🗣️. Successful participation in one of these workshops can then be traded for a precious grade bonus coin!

Special thanks to our sponsors

Top 30 of the Semester

| Rank | Name | Points |

|---|---|---|

| 🥇 | Florian Hübler | 226 |

| 🥈 | Benjamin Defant | 194 |

| 🥉 | Felix Zehetbauer | 185 |

| 4. | Max Lang | 172 |

| 5. | Sophia Knapp | 153 |

| 6. | Malte Schmitz | 148 |

| 7.* | Simon Longhao Ouyang | 146 |

| 7.* | Severin Schmidmeier | 139 |

| 8.* | Maren Biltzinger | 137 |

| 10. | Luis Bahners | 113 |

| 11. | Robert Imschweiler | 112 |

| 12. | Max Schröder | 110 |

| 13. | Simon Kammermeier | 107 |

| 14. | Tobias Markus | 105 |

| 15. | Julian Pritzi | 83 |

| 16. | Nils Cremer | 82 |

| 17. | Jakob Florian Goes | 81 |

| 18. | Jalil Salamé Messina | 76 |

| 18. | Vitus Hofmeier | 76 |

| 20. | Flavio Principato | 73 |

| 20. | Adrian Reuter | 73 |

| 22. | Paul Hofmeier | 72 |

| 23. | Rafael Brandmaier | 66 |

| 24. | Alexander Steinhauer | 63 |

| 25. | Kilian Mio | 62 |

| 26. | Manuel Pietsch | 61 |

| 27. | JiWoo Hwang | 59 |

| 28. | Patryk Morawski | 57 |

| 29. | Florian Weiser | 56 |

| 30. | Philip Haitzer | 53 |

* Updated on 15.02 due to a missed Schönheitswettbewerb submission. Old ranks were prioritised if affected by this late update.

Week 1 (updated 26/11/2020)

Saluton, karaj konkursuloj! This is your Mastro de la Konkurso speaking. As a reminder, the task this week was to take a number between 0 and \(10^{66}-1\) and render it in words in the international language, Esperanto. The MC Sr is an avid Esperanto speaker and has been scheming for years to force Esperanto-related tasks on helpless undergraduates. And so his army of undergraduates toiled tirelessly for 10 days in a seemingly impossible attempt to please him. But now it is time to assess the results!

So the MC Sr reorganised his collection of bash scripts and brushed up on his sed knowledge and ploughed through a total of 728 homework submissions. Only 105 of them contained both the {-WETT-} tags and the string "iard" though, so the MC thinks it is safe to say that there are no more than 105 serious attempts to participate in the Wettbewerb (the remaining ones were tested nonetheless, just to be sure – the "iard" is just for the sake of statistics).

Some students who decided not to participate did not handle the cases ≥ \(10^6\) in a special way, leading to results such as numberToEo (10^6) == "mil mil". Others included some extra case distinctions and returned some special text, such as ‘Fault: Value too large! Not designed to take part in competition.’ Some of the messages also carried a hint of frustration, such as ‘enough is enough’ and a Polish word best left out of this blog.

One student even wrote a message in Esperanto for numbers ≥ \(10^6\): ‘eraro, enigo tro granda’. This student is probably still a komencanto though (or relied on Google Translate): the sentence is supposed to mean ‘error, input too large’, but ‘enigo’ is the act of input itself, not the data that are being input. The correct word for that is ‘enigaĵo’. But do not despair: Nur tiu ne eraras, kiu neniam ion faras!

One solution was submitted by the living meme and legend Johannes Stöhr himself. Yes, tales of Herr Stöhr’s deeds in PGDP and elsewhere have reached even the elderly MC Sr in his ivory tower, even though he did PGDP 10 years ago (long before the era of penguins). Herr Stöhr already completed FPV last year, so the council of MCs is not quite sure what he is still doing here and why he answers all these Piazza questions (he is not being paid for it, to their knowledge), but they know better than to question the motives of mythical beings such as Herr Stöhr and thus simply appreciate the assistance. However, any submissions made by him and other alumni will, of course, be considered außer Konkurrenz.

Unfortunately, Mr Stöhr and 66 of his fellow participants ran afoul of the ever-looming spectre that is correctness:

- Quite a few submissions produced extra spaces before or after words

- A surprising number of submissions were rejected because of simple spelling mistakes, such as ‘miljono’ instead of ‘miliono’. Annoying, but rules are rules!

- Some submissions forgot to pluralise (‘dek milionoj’, not ‘dek miliono’)

- Others just wrote ‘miliono’ instead of ‘unu miliono’

- A few produced very strange results, such as (‘milionoj mil’, ‘whatcent mil’, ‘ljvljz mil’, ‘unu milono nul’)

- One submission was disqualified because it failed to compile.

- Three students correctly printed 1,000 as ‘mil’, but 1,001,000 as ‘unu miliono unu mil’ instead of ‘unu miliono mil’

The last one is debatable because both variants are sensible and there was no example on the exercise sheet that directly contradicts this. However, this question was raised and answered on ~~Piazza~~ Zulip very early, so the MC Sr decided not to accept these solutions. If you didn’t see the Zulip thread: sorry! The MC Sr will try to state the requirements more clearly in the future. But do monitor Zulip regularly for clarifications!

In addition to this, there were a few issues of formal nature:

- One student cheekily added a clause digitToEo 10 = "dek", with only that clause in the {-WETT-} tags. The MC Sr never said very clearly whether this was allowed or not, so he let it pass.

- One student modified digitToEo a lot, with all the modifications outside the {-WETT-} tags. He was given a stern look and his code was moved where it belonged (inside the tags), which kicked him from place 27 to place 35.

- A few other students forgot one of the {-WETT-} tags. The MC Sr was kind enough to fix this for the first week, but it seems that none of them made it into the Top 30 anyway. Still, be careful about this in the future!

So, let us move on to the solutions that passed. The bottom half of the Top 30 more or less used the same approach as the Musterlösung: some code to deal with numbers less than 1000, some code to compute the word for numbers of the form \(1000^k\), and then some code to deal with numbers larger than 1000 by stripping off chunks of 3 digits. Here is a nice 251-token implementation of this approach by Victor Pacyna:

    numberToEo :: Integer -> String
    numberToEo 0 = "nul"
    numberToEo n = number n 0

    number :: Integer -> Integer -> String
    number n x
      | x == 0 = number d0 1 ++ printIf (printIf " " d0 ++ digitToEo m0) m0
      | x == 1 = number d0 2 ++ printIf (printIf " " d0 ++ printIf (digitToEo m0) (m0 - 1) ++ "dek") m0
      | x == 2 = (if d0 > 0 then number d0 3 else "") ++ printIf (printIf " " d0 ++ printIf (digitToEo m0) (m0 - 1) ++ "cent") m0
      | x == 3 = number d000 6 ++ printIf (printIf " " d000 ++ printIf (number m000 0 ++ " ") (m000 - 1) ++ "mil") m000
      | x < 64 = number d000 (x + 3) ++ printIf (printIf " " d000 ++ number m000 0 ++ " " ++ ending x ++ printIf "j" (m000 - 1)) m000
      | otherwise = ""
      where d0 = div n 10
            m0 = mod n 10
            d000 = div n 1000
            m000 = mod n 1000

    ending :: Integer -> String
    ending x
      | x == 6 = "miliono"
      | x == 9 = "miliardo"
      | mod x 6 == 0 = number (div x 6) 0 ++ "iliono"
      | otherwise = number (div (x - 3) 6) 0 ++ "iliardo"

    printIf :: String -> Integer -> String
    printIf s c = if c > 0 then s else ""

Note the clever little function printIf that he employed in order to save tokens on the reoccurring pattern of ‘I want to have the string s but only if the number c is at least this big’. Many contestants used a similar function that takes a Boolean b and returns if b then s else "", but his leads to fewer tokens. In one of the MC Sr’s solutions, he had used a very similar function whenGt :: Integer -> Integer -> String -> String that returns if a > b then s else "".
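To make the token argument a little more concrete, here is a small sketch that puts the three variants side by side. printIfB and plural are names made up for this comparison; printIf and whenGt follow the descriptions in the text. The point is only that the Boolean variant forces every call site to spell out a comparison, while the numeric variants hide it inside the helper.

```haskell
-- Boolean variant (name invented here): each call site needs its own comparison.
printIfB :: Bool -> String -> String
printIfB b s = if b then s else ""

-- Numeric variant as in the solution above: the comparison with 0 is built in.
printIf :: String -> Integer -> String
printIf s c = if c > 0 then s else ""

-- Two-argument variant as described for the MC Sr's solution.
whenGt :: Integer -> Integer -> String -> String
whenGt a b s = if a > b then s else ""

-- Illustrative use: append the plural "j" only for quantities above 1.
plural :: Integer -> String
plural q = "miliono" ++ printIf "j" (q - 1)
```

With the Boolean variant, the same call would read printIfB (q > 1) "j", which spends extra tokens on the parentheses and the comparison at every use.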

Noah Dormann wrote the following 176-token single-function recursion that makes use of an integer logarithm function from the library:

    numberToEo x
      | log == 0 = digitToEo x
      | log >= 3 = chooseMiddle log3 (x `div` 10^(log3*3)) ++ if logMLower > 0 then " " ++ numberToEo logMLower else ""
      | otherwise = (if logUpper > 1 then digitToEo logUpper else "") ++ chooseMinor log ++ if logLower > 0 then " " ++ numberToEo logLower else ""
      where
        log = toInteger $ integerLogBase 10 x
        log3 = log `div` 3
        logUpper = x `div` 10^log
        logLower = x `mod` 10^log
        logMLower = x `mod` 10^(log3*3)

    digitToEoM 1 = " m"
    digitToEoM 10 = " dek"
    digitToEoM x = " " ++ digitToEo x

    chooseMiddle 1 logMUpper = if logMUpper > 1 then numberToEo logMUpper ++ " mil" else "mil"
    chooseMiddle log3 logMUpper = numberToEo logMUpper ++ digitToEoM (log3 `div` 2) ++ (if even log3 then "iliono" else "iliardo") ++ if logMUpper > 1 then "j" else ""

    chooseMinor 2 = "cent"
    chooseMinor 1 = "dek"

Robert Imschweiler (172 tokens) has the following nice and clean solution:

    powerTokenTuples = zip
      [10 ^ x | x <- [1,2] ++ [3,6..66]]
      (
        ["dek", "cent", "mil", "miliono", "miliardo"]
        ++ [ numberToEo x ++ y | x <- [2..10], y <- ["iliono", "iliardo"]]
      )

    numberToEo :: Integer -> String
    numberToEo n
      | n < 10 = digitToEo n
      | otherwise = numberToEoDetail n x y
      where (x, y) = last (filter (\(x,y) -> x <= n) powerTokenTuples)

    numberToEoDetail :: Integer -> Integer -> String -> String
    numberToEoDetail n divisor token =
      (if division > 1 || divisor > 1000 then numberToEo division ++ (if divisor > 100 then " " else "") else "")
      ++ token
      ++ (if divisor > 1000 && division > 1 then "j" else "")
      ++ (if remainder > 0 then " " ++ numberToEo remainder else "")
      where (division, remainder) = divMod n divisor

He first constructs a list of all the named powers of ten (i.e. 10, 100, 1000, \(10^6\), \(10^9\), …) and their corresponding strings and then uses it to recursively strip off a multiple of the biggest suitable number. Handling all the special cases cost him a lot of tokens though, since ‘dek’ and ‘cent’ behave differently from ‘mil’, and the ‘milionoj’ etc. behave differently still.

Tobias Schwarz (164 tokens) was the first to employ a lookup table to save tokens. The trick is that a string literal is just one token, and you can cram a lot of data into a single string.

    str = "nul unu du tri kvar kvin ses sep ok nau dek dek_unu dek_du […] naucent_naudek_ses naucent_naudek_sep naucent_naudek_ok naucent_naudek_nau"

    pStrs = "mil mil miliono milionoj miliardo miliardoj duiliono […] dekiliono dekilionoj dekiliardo dekiliardoj"

    trip :: Integer -> Bool -> String
    trip n withOne
      | n == 0 || (n == 1 && not withOne) = ""
      | otherwise = map repl (words str !! fromInteger n)
      where
        repl '_' = ' '
        repl x = x

    nPotStr :: Integer -> Integer -> String
    nPotStr x n
      | n * x == 0 = ""
      | otherwise = words pStrs !! fromInteger (n `div` 3 * 2 - 2 + min (x - 1) 1) ++ " "

    wOne :: Integer -> Bool
    wOne x = x /= 3

    pot :: Integer -> Integer -> String
    pot n p
      | n < (10 ^ p) = ""
      | otherwise = pot n (p + 3) ++ trip x (wOne p) ++ take (fromInteger (if wOne p then x else x - 1)) " " ++ nPotStr x p
      where
        x = n `div` (10 ^ p) `mod` 1000

    numberToEo :: Integer -> String
    numberToEo 0 = "nul"
    numberToEo n = init (pot n 0)

The string literals that encode the lookup table were abbreviated with […] here because they are very big and not very interesting.

Herr Schwarz could have saved quite a few more tokens fairly easily, e.g. by using genericTake instead of take (fromInteger …) (and similarly for !!), by getting rid of the function wOne, by using '\n' and ' ' as separators in his lookup table instead of ' ' and '_'. Perhaps he thought the clever lookup table would be enough by itself? Unfortunately not. The Wettbewerb is fierce!
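The following sketch illustrates two of these suggestions on a deliberately tiny table. The table contents and the names smallNumbers, teens, viaBang and viaGeneric are made up for this example; genericIndex and genericTake are the standard functions from Data.List.

```haskell
import Data.List (genericIndex, genericTake)

-- A whole table hidden in one string literal (one token), split at run time.
smallNumbers :: [String]
smallNumbers = words "nul unu du tri kvar kvin ses sep ok nau dek"

-- Indexing with (!!) needs a conversion from Integer to Int ...
viaBang :: Integer -> String
viaBang n = smallNumbers !! fromInteger n

-- ... while genericIndex accepts the Integer directly.
viaGeneric :: Integer -> String
viaGeneric n = smallNumbers `genericIndex` n

-- With '\n' as the entry separator, entries may contain spaces themselves,
-- so no post-processing step that turns '_' back into ' ' is needed.
teens :: [String]
teens = lines "dek\ndek unu\ndek du"
```

For instance, teens `genericIndex` 1 yields "dek unu" directly, and genericTake 2 "abc" behaves like take 2 "abc" without any fromInteger.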

Manuel Pietsch (154 tokens) uses a very different approach: he uses Haskell’s show function to convert the input number to a string and then recurses over that string:

    --split the number into digits and feed the reverse to listToString, the concatenate the words
    numberToEo :: Integer -> [Char]
    numberToEo 0 = "nul" --handle special case
    numberToEo num = unwords . listToString 0 . reverse $ read . return <$> show num

    --handle a list of digits three at a time
    listToString :: Integer -> [Integer] -> [[Char]]
    listToString _ [] = mempty -- no digits -> no words
    listToString 1 (1:0:0:xs) = listToString 2 xs ++ pure "mil" -- special case: only write mil instead of unu mil
    listToString ending (a:b:c:xs) = succ ending `listToString` xs ++ withEndingToString c "cent" ++ withEndingToString b "dek" ++ withEndingToString a "" ++ endingToString ending (a+2* ( b+c))
    listToString ending xs = listToString ending $ xs ++ pure 0 -- fill with 0 if no triple left

    endingToString :: Integer -> Integer -> [[Char]]
    endingToString 0 _ = mempty -- keine endung bei 1
    endingToString _ 0 = mempty -- keine Endung wenn alle 0
    endingToString num 1 = pure $ words "ignore mil miliono miliardo duiliono duiliardo triiliono triiliardo kvariliono kvariliardo kviniliono kviniliardo sesiliono sesiliardo sepiliono sepiliardo okiliono okiliardo nauiliono nauiliardo dekiliono dekiliardo" `genericIndex` num
    endingToString num _ = pure $ words "ignore mil milionoj miliardoj duilionoj duiliardoj triilionoj triiliardoj kvarilionoj kvariliardoj kvinilionoj kviniliardoj sesilionoj sesiliardoj sepilionoj sepiliardoj okilionoj okiliardoj nauilionoj nauiliardoj dekilionoj dekiliardoj" `genericIndex` num

    withEndingToString :: Integer -> [Char] -> [[Char]]
    withEndingToString 0 _ = mempty -- digit zero -> no word
    withEndingToString 1 "" = pure "unu" -- special case 1 in einerstelle
    withEndingToString 1 ending = pure ending -- specialcase 1 keine zahl, nur endung
    withEndingToString number ending = pure $ digitToEo number <> ending

He also employs two lookup tables (although much tamer ones) to generate the ‘-ilionoj’ / ‘-iliardoj’ names. He also used a few more tricks, such as writing mempty instead of [] (using the fact that lists form a monoid) and writing return x (or pure x) instead of [x] (using the fact that lists form an applicative functor). Don’t worry, none of this will really appear in our lecture!
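For readers who have not met these idioms before, here is a tiny sketch of what they evaluate to on lists. The names noWords, oneWord and digits are invented for this illustration; the last definition mirrors the read . return <$> show num expression from the solution above.

```haskell
-- mempty at list type is the empty list.
noWords :: [String]
noWords = mempty

-- pure (or return) at list type builds a one-element list.
oneWord :: [String]
oneWord = pure "mil"

-- Each character of the decimal representation is wrapped into a
-- one-character string by pure and then parsed by read.
digits :: Integer -> [Integer]
digits n = read . pure <$> show n
```

So noWords is [], oneWord is ["mil"], and digits 305 gives [3,0,5].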

Dan Lionis (107 tokens, a respectable 9th place) also used some reasonably-sized lookup tables to great effect:

    numberToEo :: Integer -> String
    numberToEo 0 = "nul"
    numberToEo n = unwords . filter (/= ".") $ helper n 0

    helper :: Integer -> Integer -> [String]
    helper 0 _ = []
    helper n e =
      helper (div n 10) (succ e)
      ++ [digit e $ mod n 1000, thousandSuffix e $ mod n 1000]

    digit :: Integer -> Integer -> String
    digit 3 1 = "."
    digit e n = genericIndex allDigits $ 10 * mod e 3 + mod n 10

    allDigits :: [String]
    allDigits = words ". unu du tri kvar kvin ses sep ok nau . dek dudek tridek kvardek kvindek sesdek sepdek okdek naudek . cent ducent tricent kvarcent kvincent sescent sepcent okcent naucent"

    allSuffixe :: [String]
    allSuffixe = words ". . . . . . mil mil . . . . miliono milionoj . . . . miliardo miliardoj . . . . duiliono duilionoj . . . . duiliardo duiliardoj . . . . triiliono triilionoj . . . . triiliardo triiliardoj . . . . kvariliono kvarilionoj . . . . kvariliardo kvariliardoj . . . . kviniliono kvinilionoj . . . . kviniliardo kviniliardoj . . . . sesiliono sesilionoj . . . . sesiliardo sesiliardoj . . . . sepiliono sepilionoj . . . . sepiliardo sepiliardoj . . . . okiliono okilionoj . . . . okiliardo okiliardoj . . . . nauiliono nauilionoj . . . . nauiliardo nauiliardoj . . . . dekiliono dekilionoj . . . . dekiliardo dekiliardoj . . . ."

    thousandSuffix :: Integer -> Integer -> String
    thousandSuffix _ 0 = "." -- group is 0 => no suffix
    thousandSuffix e group = genericIndex allSuffixe (e * 2 + min 1 (div group 2))

Simon Longhao Ouyang (78 tokens, 6th place) goes big again:

    trimL :: String -> [String]
    trimL "" = []
    trimL s = if last s == ' ' then trimL $ init s else [s]

    nE :: Integer -> Integer -> [String]
    nE 0 _ = trimL ""
    nE n p = nE (n `div` 1000) (p + 1) ++ (trimL . take 34 $ drop (fromIntegral $ n `mod` 1000 * 34 + p * 34000) " unu du tri […] naucent naudek ok dekiliardoj naucent naudek nau dekiliardoj ")

    numberToEo :: Integer -> String
    numberToEo 0 = "nul"
    numberToEo n = unwords $ nE n 0

He was the first one who had the idea to not just save the numbers between 0 and 999 in a lookup table, but also every possible suffix, i.e. the table contains every number of the form \(a \cdot 1000^b\) for \(a\in[0;1000)\) and \(b\in[0;21]\).
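The indexing scheme behind this table is fixed-width slots: every entry occupies the same number of characters (34 in the solution above), so entry \(i\) starts at offset \(34 \cdot i\). A miniature sketch of the same idea follows; the slot width of 5, the '.' padding character and all names are assumptions made for readability (the original pads with spaces).

```haskell
-- Width of every slot in the packed table (the original solution uses 34).
slotWidth :: Int
slotWidth = 5

-- Six entries, each padded with '.' to exactly slotWidth characters.
packed :: String
packed = "nul..unu..du...tri..kvar.kvin."

-- Drop any padding characters at the end of a slot.
trimRight :: String -> String
trimRight = reverse . dropWhile (== '.') . reverse

-- Entry i lives at characters [i * slotWidth, (i + 1) * slotWidth).
entry :: Integer -> String
entry i = trimRight (take slotWidth (drop (fromIntegral i * slotWidth) packed))
```

In the full solution the index additionally encodes the power of 1000: the entry for \(a \cdot 1000^b\) sits at slot \(a + 1000 \cdot b\), which is where the offset n `mod` 1000 * 34 + p * 34000 comes from.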

A much shorter version of this was submitted by Florian Hübler (38 tokens, 2nd place):

    threeDigits = lines "\n unu\n du\n tri\n kvar\n kvin\n[…]naucent naudek nau dekiliardoj"

    numberToEo::Integer->String
    numberToEo 0 = "nul"
    numberToEo x = tail $ concat [ threeDigits `genericIndex` (n * 1000 + x `div` 1000^n `mod` 1000) | n <- enumFromThenTo 21 20 0]

As he noted himself, one could save one more token by inlining the threeDigits function, but performance suffers a lot with repeated calls to numberToEo: as it is written now, the call lines "\n unu[…]" is evaluated just once, but after inlining, it would be evaluated every time numberToEo is called. And this is a very big string.
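The difference between the two variants can be sketched on a toy table. The names sharedTable, lookupShared and lookupInlined are invented for this illustration, and whether the inlined variant really repeats the work depends on the compiler's optimisations, so treat this as a sketch of the idea rather than a benchmark.

```haskell
import Data.List (genericIndex)

-- Top-level constant: the result of `lines` is computed at most once
-- and then shared between all calls.
sharedTable :: [String]
sharedTable = lines "nul\nunu\ndu\ntri"

lookupShared :: Integer -> String
lookupShared i = sharedTable `genericIndex` i

-- Inlined variant: the string may be split again on every call
-- (unless the compiler happens to float the expression out).
lookupInlined :: Integer -> String
lookupInlined i = lines "nul\nunu\ndu\ntri" `genericIndex` i
```

Both functions return the same results; only the amount of repeated work differs, which matters once the string is as large as the one above.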

Malte Schmitz, probably having sold his soul to a demon, managed to go 10 tokens lower than that, catapulting him into first place with the following solution:

    {-# LANGUAGE OverloadedStrings #-}

    import qualified Data.Map as Map
    import Data.Text (Text, chunksOf, pack, unpack)

    -- This is my current solution for "Number To Esperanto", in 28 tokens.
    -- I hereby license all code below this statement as follows:
    --
    -- MIT License
    -- Copyright (c) 2020 Malte Schmitz
    --
    -- Permission is hereby granted, free of charge, to any person obtaining a copy
    -- of this software and associated documentation files (the "Software"), to deal
    -- in the Software without restriction, including without limitation the rights
    -- to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
    -- copies of the Software, and to permit persons to whom the Software is
    -- furnished to do so, subject to the following conditions:
    --
    -- The above copyright notice and this permission notice shall be included in all
    -- copies or substantial portions of the Software.
    --
    -- THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
    -- IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
    -- FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
    -- AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
    -- LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
    -- OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
    -- SOFTWARE.

    -- This is the magic table. Its a list of maps.
    -- Each list member holds conversions for one 3-number pack. index 0 contains representations for 0-999,
    -- index 1 contains those for 1-999 * 1000, and so on.
    -- Each map maps from the reversed string representation of the 3-pack (e.g. 125 = "521") to
    -- the correct string. The table is pregenerated by the auxiliary function in the comment above.
    -- To account for the "nul", we also map special 2-pack and 1-packs (e.g. "000" = "", but "0" = "nul")
    table :: [Map.Map Text [String]]
    table = read "[fromList [(\"0\",[\"nul\"]),(\"00\",[]),(\"000\",[]),(\"001\",[\"cent\"]),(\"002\",[\"ducent\"]),(\"003\",[\"tricent\"]),(\"004\",[\"kvarcent\"]), …], …, fromList […, (\"999\",[\"naucent naudek nau dekiliardoj\"])]]"

    -- The main conversion function. Read comments from bottom to top for consistent explanation.
    numberToEo :: Integer -> String
    numberToEo n =
      unwords                   -- Finally, concatenate the array and insert spaces in between.
      $ concat                  -- There can be empty lookups, e.g. any "000" in the middle of the number.
                                -- To not introduce multiple spaces, we return arrays with a single member
                                -- and concat them here.
      $ reverse                 -- The result is still reversed, so we need to unreverse it
      $ zipWith (Map.!) table   -- Look up each chunk in the corresponding map from the table.
                                -- This is the ultra magic. Keys are saved in reverse so we don't have to
                                -- unreverse them here, and zipWith automatically matches only as many keys
                                -- as the smaller list contains.
      $ chunksOf 3              -- Split into chunks of 3, e.g. "0987654321" = ["098", "765", "432", "1"]
      $ pack                    -- Create Text from String
      $ reverse                 -- Reverse it, so when it is split into groups of 3 later on, we start making
                                -- groups of 3 starting at the least significant part of the number
      $ show n                  -- Convert n to string

For some reason, he deemed it necessary to force the MC Sr to include this massive licencing statement (perhaps the demon told him to do that?). Strange, but the MC Sr supposes when you win the Wettbewerb with a distance of 10 tokens to the runner-up and put the MC himself to shame, you are entitled to some eccentricities. The general approach of Herr Schmitz is quite similar to the more advanced lookup tables we have seen before (cf. e.g. Ouyang and Hübler). He combines a number of tricks that others used as well:

- the advanced lookup table (cf. e.g. the Herren Ouyang and Hübler) that contains all numbers of the form \(a\cdot 1000^b\)

- methods from Data.Text (here the important one is chunksOf)

- using show to convert the input number into a string

He was, however, the only one to combine all of these, and he added a few more unique tricks:

- He uses Haskell’s read function (which is more or less the inverse to show) to store pretty much any Haskell data in a string (this means that you can represent any constant as just two tokens).

- The lookup table does not map numbers to strings, but strings of up to three digits to strings. This means that he can deal with all those pesky special cases (e.g. that ‘0’ is ‘nul’, but ‘1000’ is ‘mil’ and not ‘mil nul’) in the lookup table, which costs no tokens.

- His lookup table is a list of maps (one map for each power of 1000), which plays together nicely with his use of the zipWith function.

- The maps return not a single string, but a list of strings. The advantage of this is that it allows him to drop triplets like 000 completely.
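To see how these tricks interlock, here is a heavily scaled-down sketch that sticks to plain Strings and association lists from base instead of Data.Text and Data.Map. The names chunksOf3, digitChunks, miniTable and render, the "?" fallback, and the handful of table entries are all assumptions made for this illustration; the real table covers every three-digit chunk for every power of 1000.

```haskell
import Data.Maybe (fromMaybe)

-- Split a string into pieces of (at most) three characters.
chunksOf3 :: String -> [String]
chunksOf3 [] = []
chunksOf3 s = take 3 s : chunksOf3 (drop 3 s)

-- Reversed digit chunks, least significant chunk first.
digitChunks :: Integer -> [String]
digitChunks = chunksOf3 . reverse . show

-- One association list per power of 1000, decoded from a single string
-- literal with read. Keys are the reversed digit chunks; values are word lists.
miniTable :: [[(String, [String])]]
miniTable = read "[[(\"0\",[\"nul\"]),(\"1\",[\"unu\"]),(\"000\",[]),(\"100\",[\"unu\"])],[(\"1\",[\"mil\"]),(\"2\",[\"du\",\"mil\"])]]"

-- Look up each chunk in the table for its power of 1000, then restore the order.
render :: Integer -> String
render n = unwords (concat (reverse (zipWith lookupChunk miniTable (digitChunks n))))
  where
    -- Chunks missing from this toy table are rendered as "?".
    lookupChunk m key = fromMaybe ["?"] (lookup key m)
```

For example, 2001 is reversed to "1002" and chunked into "100" and "2"; the first table maps "100" to ["unu"], the second maps "2" to ["du","mil"], and reversing and joining gives "du mil unu". For 1000 the chunk "000" maps to the empty list and simply disappears, leaving "mil".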

As impressive as this is, the MC Sr is a bit disappointed that Mr Schmitz did not go any further. Perhaps he only consulted a lesser demon, because one could have shortened this solution to 25 tokens:

    table :: [[(Text, String)]]
    table = read "[[(\"0\",\"nul\"),(\"001\",\"cent\"),(\"002\",\"ducent\"),(\"003\",\"tricent\"),…], [(\"001\",\"cent mil\"),(\"002\",\"ducent mil\"),(\"003\",\"tricent mil\"),…], …]"

    numberToEo :: Integer -> String
    numberToEo =
      unwords <<< catMaybes <<< reverse <<< flip lookup `zipWith` table <<< chunksOf 3 <<< pack <<< reverse <<< show

The trick here is to just use association lists instead of map, and triplets like 000 are just not in it. If the <<< confuses you, that is just another way to write . (function composition) that has a more suitable operator precedence than the . operator.
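The precedence point can be tried out on a two-digit toy version of the same pipeline. The table and the name twoDigits are made up for this sketch; <<< is the operator from Control.Category in base, declared infixr 1, so a backticked zipWith (which binds at the default level 9) groups before the composition. Writing . in the same place would, as far as the fixity rules go, be rejected, because . is infixr 9 and clashes with the left-associative backticked operator.

```haskell
import Control.Category ((<<<))
import Data.Maybe (catMaybes)

-- Toy table: one association list per decimal position (units, then tens).
-- Digits that are absent (here '0' and everything above '2') produce no word.
table :: [[(Char, String)]]
table =
  [ [('1', "unu"), ('2', "du")]
  , [('1', "dek"), ('2', "dudek")]
  ]

-- Parsed as: unwords <<< catMaybes <<< reverse
--              <<< (flip lookup `zipWith` table) <<< reverse <<< show
twoDigits :: Integer -> String
twoDigits =
  unwords <<< catMaybes <<< reverse <<< flip lookup `zipWith` table <<< reverse <<< show
```

Here twoDigits 21 evaluates to "dudek unu" and twoDigits 20 to "dudek", since the lookup for '0' returns Nothing and catMaybes discards it.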

So what was the MC Sr’s solution? Well, frankly, his solutions do not have any significant tricks that we haven’t seen from the contestants above, and they do not compare favourably in terms of brevity either. He had thought that going up to \(10^{66}-1\) would be enough to preclude big lookup tables and he failed to think of the clever approach of storing chunks of three digits in the table, so his shortest original solution only used a small lookup table for the ‘-ilionoj’ / ‘-iliardoj’ and has 154 tokens, which would have tied him with his name twin Herr Pietsch in 12th place.

    whenGt :: Integer -> Integer -> [a] -> [a]
    whenGt a b = filter $ const $ a > b

    numberToEoBasic :: Integer -> String
    numberToEoBasic n
      | n < 10 = digitToEo n
      | n < 100 = aux 10 "dek"
      | n < 1000 = aux 100 "cent"
      | otherwise = aux 1000 $ whenGt n 1999 " " ++ "mil"
      where aux base baseString = whenGt q 1 (numberToEoBasic q) ++ baseString ++ whenGt r 0 (" " ++ numberToEoBasic r)
              where (q, r) = quotRem n base

    myList :: [(Integer, String)]
    myList = read "[(21,\"dekiliardo\"),(20,\"dekiliono\"),(19,\"nauiliardo\"),(18,\"nauiliono\"),(17,\"okiliardo\"),(16,\"okiliono\"),(15,\"sepiliardo\"),(14,\"sepiliono\"),(13,\"sesiliardo\"),(12,\"sesiliono\"),(11,\"kviniliardo\"),(10,\"kviniliono\"),(9,\"kvariliardo\"),(8,\"kvariliono\"),(7,\"triiliardo\"),(6,\"triiliono\"),(5,\"duiliardo\"),(4,\"duiliono\"),(3,\"miliardo\"),(2,\"miliono\")]"

    aux :: Integer -> (Integer, String) -> (Integer, [String])
    aux n z = (mod n b, whenGt q 0 $ numberToEoBasic q : [snd z ++ whenGt q 1 "j"])
      where q = div n b
            b = 1000 ^ fst z

    numberToEo :: Integer -> String
    numberToEo 0 = "nul"
    numberToEo n = unwords $ concat ss ++ whenGt n' 0 [numberToEoBasic n']
      where (n', ss) = mapAccumL aux n myList

So what have we learnt this week?

- Only about 14 % of our students have the time and motivation to spare to participate in our venerable Wettbewerb.

- Only about two thirds of these actually submitted correct code.

- In a few cases, what correct means was perhaps a bit muddy. So do check ~~Piazza~~ Zulip for clarifications, or ask a question there yourself (if you are positive your question is not already answered in the problem statement. There were a lot of such questions as well…)

- The MC Sr was sorely and definitively beaten in the first week already. Is he perhaps getting old and losing his edge? Alas, it must be so!

Should this exercise have instilled you with a great urge to learn Esperanto, the MC Sr can very much recommend the free course on Duolingo (that is where he learnt it). He also heard good things about the course from lernu!.

Otherwise, rest assured that the next few weeks of FPV will be Esperanto-free. Promesite!

Note: Should you have ≤ 296 tokens (after moving any relevant code from outside the {-WETT-} tags inside, if needed) and not appear in the Top 30 of this week, you most probably submitted an incorrect solution. If you are convinced your solution was correct, feel free to take it up with the MC Sr via Zulip or email and he will look into it.

Update: One student’s submission was overlooked due to the MC Sr’s ~~clumsiness~~ technical issues and was retroactively inserted into the ranking, with appropriate points awarded. Additionally, one of the three students who were disqualified for printing 1,001,000 as ‘unu miliono unu mil’ petitioned the MC Sr to reconsider his stance on the issue, and he did. It does seem a bit cruel to award 0 points to those three students, so they have now been un-disqualified. In the future, the Council of MCs should try to communicate such clarifications more effectively in the Wettbewerb announcement topic on Zulip.

Retroactive insertions into the ranking are marked with ❗. Note that the remaining students’ scores were not altered, so the Top 30 this week contains 34 students now.

Final Scores Week 1

| Rank | Name | Points | Tokens |

|---|---|---|---|

| 🥇 | Malte Schmitz | 30 | 28 |

| 🥈 | Florian Hübler | 29 | 38 |

| 🥉 | Florian Weiser | 28 | 39 |

| 4. | Stefan Betz | 27 | 45 |

| 5. | Julian Pritzi | 26 | 73 |

| 6. | Simon Longhao Ouyang | 25 | 78 |

| 7. | Martin Lambeck | 24 | 89 |

| 7. | Benjamin Defant | 24 | 89 |

| 9. | Dan Lionis | 22 | 107 |

| 10. | Vitus Hofmeier | 21 | 136 |

| 11. | Simon Kammermeier | 20 | 137 |

| 12. | Manuel Pietsch | 19 | 154 |

| ❗ | Felix Zehetbauer | 18 | 161 |

| 13. | Tobias Schwarz | 18 | 164 |

| ❗ | Maren Biltzinger | 17 | 166 |

| 14. | Max Lang | 17 | 169 |

| 15. | Robert Imschweiler | 16 | 172 |

| 16. | Noah Dormann | 15 | 176 |

| 17. | Nils Cremer | 14 | 194 |

| 18. | Rafael Brandmaier | 13 | 197 |

| 19. | Flavio Principato | 12 | 203 |

| 20. | Dominik Weinzierl | 11 | 211 |

| 21. | Jakub Stastny | 10 | 217 |

| ❗ | Rebecca Ghidini | 9 | 222 |

| 22. | Salim Hertelli | 9 | 236 |

| 23. | Luis Bahners | 8 | 240 |

| 24. | Alejandro Tang Ching | 7 | 243 |

| 25. | Victor Pacyna | 6 | 251 |

| 26. | Nils Harmsen | 5 | 253 |

| ❗ | Kilian Mio | 4 | 278 |

| 27. | Adrian Reuter | 4 | 287 |

| 28. | Cara Dickmann | 3 | 291 |

| 29. | Ata Keskin | 2 | 294 |

| 30. | Julian Sikora | 1 | 296 |

Week 2 (updated 26/11/2020)

Hello everyone, this is the MC Sr. He took his time with this week’s evaluation (or rather with writing the blog post – the evaluation was actually done within a day after the deadline). A brief glance reassured the MC Sr that this week, nobody submitted any outrageous lookup tables. Phew.

There were 513 submissions this week, and the MC Sr subjected them to quite the barrage of tests. Except for the last round, all tests were QuickCheck tests comparing the student solution to the sample solution (modulo duplication and permutation of elements). To ensure fairness, a fixed RNG seed was used by calling QuickCheck with quickCheckWithResult (stdArgs {replay = Just (mkQCGen 42, 0)}), so that all students got the exact same set of test inputs. If you are curious, you can look at the code the MC Sr used to run the test, including the QuickCheck generators for tournaments. A few students were tied in the end, so the MC broke the ties in favour of the student with the faster solution (so long as the difference was more than 10 %; otherwise he just left them tied).
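What ‘modulo duplication and permutation of elements’ could mean in code is sketched below. This is only a guess at the shape of such a comparison, not the MC Sr’s actual test code; the name sameModuloDupPerm is invented here, and only functions from Data.List are used.

```haskell
import Data.List (nub, sort)

-- Two result lists count as equal if they contain the same elements,
-- regardless of order and of how often each element is repeated.
sameModuloDupPerm :: Ord a => [a] -> [a] -> Bool
sameModuloDupPerm xs ys = sort (nub xs) == sort (nub ys)
```

Under this comparison, [3,1,1,2] and [2,3,1] are accepted as the same answer, whereas [1,2] and [1,3] are not.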

The first round of tests consisted of 250 input tournaments with sizes between 1 and 10 and a timeout of 5 seconds. Out of the 513 submissions, 242 passed, with the slowest ones taking about 0.5 seconds.

Next, the MC Sr tested the remaining 242 submissions on a single tournament of size 30 and a generous timeout of 20 seconds. This was done in order to detect submissions that definitely have exponential running time (more than 20 seconds for size 30 is far worse than what was required in the problem statement). Of the 242 submissions, 180 timed out. The remaining 62 terminated in less than 0.6 seconds, which shows that the separation we got here is quite dramatic. Although he did not actually check, the MC Sr is certain that the offender here is always the implementation of TC, since a super-polynomial implementation of the other two is difficult to accomplish.

Next, the MC Sr wanted to make sure the remaining submissions really do perform well on bigger examples. He had said on Zulip that he would not disqualify any submissions due to timeout as long as they terminated within a second on examples of size 50, so that’s exactly what he tested next: one single input of size 50. All but 7 submissions terminated in less than 0.06 seconds; two more took 0.25, 0.56, and 1.27 seconds. The MC Sr decided to be generous and refrained from disqualifying the last one since it was close. The other three submissions, however, took more than 10 seconds, which is clearly too much (the MC Sr is an impatient man!).

In any case, only one of them would have ended up in the Top 30 by virtue of tokens anyway. That one is by Herr Markus (72 tokens). Sadly, it appears that Herr Markus and the two others were a bit unlucky when they benchmarked their solutions: on the test data the MC Sr published on Zulip, their solutions terminate within fractions of seconds. On the test input generated by QuickCheck, on the other hand, they take very long (for reasons discussed below) and must thus be disqualified. If you do not believe the MC Sr, you can find the problematic tournament of size 50 here.

To make sure all the remaining 58 submissions are really correct, the MC Sr then conducted another round with \(10^5\) inputs of size 1–20 and then all tournaments of size up to 7 and \(10^5\) tournaments of size 8 (a tournament of size \(n\) consists of \(n(n-1)/2\) matches, each of which can go either way, so that there are \(2^{n(n-1)/2}\) tournaments of size \(n\)). To make sure that no slow solution mistakenly found its way through the previous tests due to a similar fluke as the one that made the solutions by Herr Markus et al. so fast on the public test data, the MC Sr then ran another round of 250 tests on random tournaments of size 50. None of this testing kicked out any submissions, so the MC Sr was satisfied.
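To get a feeling for why exhaustive testing stops at size 7, the counting formula above can be evaluated directly. The following is a small sketch; the function names are made up for illustration.

```haskell
-- | Number of matches in a tournament with n players: one per unordered pair.
numMatches :: Integer -> Integer
numMatches n = n * (n - 1) `div` 2

-- | Number of distinct tournaments with n players: each match can go either way.
numTournaments :: Integer -> Integer
numTournaments n = 2 ^ numMatches n
```

For \(n = 7\) this gives \(2^{21} = 2097152\) tournaments, which can still be enumerated, whereas \(n = 8\) already yields \(2^{28} = 268435456\).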

After examining the Top 30 solutions by hand, the MC Sr noticed the suspicious-looking line iterate gen (uncoveredSet tournament) !! 16 in the submission by last week’s winner, Herr Schmitz. Next to it was a comment saying ‘This should be stable for any reasonable input length’. Well, unfortunately, this is not how we roll here in the FPV lecture. The MC Sr quickly produced a counterexample with 126 players on which Herr Schmitz’s solution gives an incorrect result. Herr Schmitz may argue that this is ‘unreasonably large’, but the MC Sr has been involved in Super Smash Bros. Melee™ tournaments with more than 300 contestants. Melee players tend to get very belligerent about the rules according to which they get ranked even when you do it correctly, so the MC Sr would rather not take any chances with respect to correctness. Luckily, Herr Schmitz had an alternative, longer (but correct) solution commented out, so the MC Sr just took that one instead (that did cost him 2 places in the ranking though).

So, let’s move on to the actual solutions:

Copeland winners

The Musterlösung has 32 tokens and looks like this:

copeland tournament = [i | i <- players tournament, length (dominion tournament i) == l]
  where l = maximum [length xs | xs <- tournament]

Frau Hwang has the following somewhat streamlined version of this in 23 tokens:

copeland tournament = filter ((== maximum (map length tournament)) . length . dominion tournament) $ players tournament

Frau Lin’s solution (21 tokens) stands for many similar ones that made use of the elemIndices function (with all indices shifted by 1 because the first player is 1, not 0):

copeland tournament = map (+1) $ elemIndices (maximum wins) wins
  where wins = map length tournament

Herr Kammermeier has the shortest solution with 12 tokens, using the same basic approach:

copeland = map succ <<< flip elemIndices <*> maximum <<< map length

The main trick here is the (<*>) operator from Control.Applicative, which in this particular instance fulfils (f <*> g) x = f x (g x), so flip elemIndices <*> maximum is just a shorter way of writing \xs -> elemIndices (maximum xs) xs.
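The equivalence claimed for (<*>) on functions can be checked in isolation. The sketch below places the point-free and the explicit version side by side; the names indicesOfMaxPF and indicesOfMax are hypothetical.

```haskell
import Data.List (elemIndices)

-- | Point-free version: for functions, (f <*> g) x = f x (g x).
indicesOfMaxPF :: [Int] -> [Int]
indicesOfMaxPF = flip elemIndices <*> maximum

-- | The same function written out with an explicit argument.
indicesOfMax :: [Int] -> [Int]
indicesOfMax xs = elemIndices (maximum xs) xs
```

Both functions return the zero-based positions of the maximum, e.g. [1,3] for the input [2,5,1,5].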

A few people implemented a variant of this where instead of incrementing all the elements in the list returned by elemIndices they prepend a dummy element to the list of lengths that is guaranteed to be smaller than all the other lengths. Max Lang (15 tokens), for instance, used minBound (which is the smallest representable value of type Int):

copeland tournament = maximum dominionSizes `elemIndices` dominionSizes
  where dominionSizes = minBound : map length tournament

Some other people used -1 instead, but minBound is only one token whereas -1 is two. Note that this only works if the tournament is non-empty (which was assured by the MC Sr on Zulip).

Nils Harmsen found a very different approach (16 tokens) using a module the MC Sr had never seen before: non-empty lists.

import qualified Data.List.NonEmpty as NE

copeland tournament = NE.toList $ last $ (length . dominion tournament) `NE.groupAllWith` players tournament

The MC Sr’s solution has 12 tokens (the same number as Herr Kammermeier’s) and uses the same approach with similar magic from the reader monad:

copeland tournament = maximum >>= elemIndices $ minBound : map length tournament

The Uncovered Set

The Musterlösung looked like this (38 tokens):

uncoveredSet tournament = [x | x <- ps, uncovered x]
  where ps = players tournament
        uncovered x = null [y | y <- ps, y /= x, covers tournament y x]

Herr Vitus Hofmeier basically has a shorter version of that in 30 tokens:

uncoveredSet tournament = [i | i <- players tournament, null [j | j <- players tournament, j /= i, covers tournament j i]]

Frau Lin has a very odd variant of this (28 tokens) that uses topCycle instead of players.

uncoveredSet tournament = topCycle tournament \\ [ x | x <- topCycle tournament, y <- topCycle tournament, x /= y , covers tournament y x]

The MC Sr is not sure why she does that (it doesn’t save any tokens), but it doesn’t matter – it works (since \(\textrm{UC}\subseteq\textrm{TC}\) and if a player is in TC, every player that covers them must also be in TC).

Herr Nanouche went down to 27 tokens, using the length to take care of the annoying fact that every player is covered by himself:

uncoveredSet tournament = f `filter` players tournament
  where f x = length [player | player <- players tournament, covers tournament player x] == 1

Herr Harmsen has the following dodgy 26-token solution:

uncoveredSet tournament = [x | x <- players tournament, not $ or [covers tournament y x | y <- players tournament]]

This only works because he defined covers such that covers t i i = False, which is actually wrong according to the Aufgabenstellung. Unfortunately, the MC Sr forgot to test this case in QuickCheck so that Herr Harmsen did not notice. As a compromise, the MC Sr decided not to disqualify him, but to instead assign 3 penalty tokens to him (corresponding to the shortest straightforward fix to his solution with a correct version of covers).

Herr Lang has a ‘true’ 26-token solution:

uncoveredSet tournament =
  players tournament \\ [x | x <- players tournament, y <- x `delete` players tournament, covers tournament y x]

The shortest student solution is due to Herr Schmidmeier (23 tokens):

uncoveredSet tournament = pl \\ allCovered `concatMap` pl
  where allCovered x = covers tournament x `filter` delete x pl
        pl = players tournament

The MC Sr has an 18-token solution that follows a very different approach (it does not even use ‘covers’):

uncoveredSet tournament = map succ $ elemIndices mempty $ tail . flip filter tournament . isSubsequenceOf <$> tournament

When writing this blog post, he had no recollection of writing this code and could not remember how it worked at first. Perhaps there was some demonic involvement at work here as well? Anyway, after some fiddling with GHCi, he figured it out again: isSubsequenceOf <$> tournament builds a list \([f_1,\ldots,f_n]\) of functions such that \(f_i\) takes a list and returns True iff \(D(i)\) is a subset of that list. We then apply flip filter tournament to this list, which essentially gives us a list that tells us for each player which other players have a dominion that is a superset of theirs (i.e. which players cover them). The uncovered players are then simply those for which this list is a singleton (i.e. it becomes empty when applying tail to it).
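For readers who prefer list comprehensions, the same idea can be spelled out more verbosely. This is a sketch, not the MC Sr’s code; it assumes that a tournament is a list whose i-th entry is the dominion of player i, given as an ascending list (which is what makes isSubsequenceOf behave like a subset test). The helper coveringDominions is a made-up name.

```haskell
import Data.List (isSubsequenceOf)

type Tournament = [[Int]]

-- | All dominions that contain the given dominion d as a subset.
-- Assumes every dominion is sorted in ascending order.
coveringDominions :: Tournament -> [Int] -> [[Int]]
coveringDominions tournament d = filter (d `isSubsequenceOf`) tournament

-- | A player is uncovered iff the only dominion containing theirs is their
-- own, i.e. the list of covering dominions is a singleton.
uncoveredSet :: Tournament -> [Int]
uncoveredSet tournament =
  [ i | (i, d) <- zip [1 ..] tournament
      , length (coveringDominions tournament d) == 1 ]
```

For example, in the tournament [[2,4],[3,4],[1,4],[]], players 1 to 3 form a cycle and all beat player 4, so player 4 is covered and the result is [1,2,3].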

The Top Cycle

The obvious algorithm for TC hinted at on the Aufgabenblatt is to simply implement the definition of TC: enumerate all non-empty sets of players, filter out all the non-dominant ones, and take the smallest one of these. This algorithm is obviously correct, but it also obviously has exponential running time (which is not going to cut it with an input of size 50). Some minor optimisations of this approach are possible (e.g. generate subsets in ascending order and take the first dominant one), but the worst-case running time will still be exponential.

The key insight in the MC Sr’s solution (and the same path that most students took as well) is that TC can be built up iteratively (an approach that is very common for computing things that are defined as ‘the smallest set such that $foo’):

- Suppose we have some non-empty set \(X_0\) that we know to be a subset of \(\textrm{TC}\). This will be the invariant that we will maintain.

- If we have a non-empty subset \(X_i\subseteq\textrm{TC}\), we know that \(\bar D(X_i) \subseteq \textrm{TC}\) as well, so we can set \(X_{i+1} := X_i \cup \bar D(X_i)\) and have \(X_{i+1} \subseteq \textrm{TC}\), and of course \(X_{i+1}\neq\emptyset\).

- Since there are only finitely many players and the size of \(X_i\) only ever increases, we will reach a point when \(X_i\) does not change anymore, i.e. \(\bar D(X_i)\subseteq X_i\). This is of course precisely the definition of a dominant set.

So we have obtained a non-empty dominant set \(X_i\) that is a subset of the top cycle, and since the top cycle is the smallest such set, we have \(X_i = \textrm{TC}\).

The only remaining question now is what \(X_0\) should be. There are a couple of possible choices:

- non-deterministically choose some player \(x\) and start with \(X_0 = \{x\}\), then choose the smallest of the resulting \(n\) sets

- any uncovered player must also be part of any dominant set (because any member \(x\) of a dominant set \(X\) covers all \(y\notin X\)).

- any Copeland winner (i.e. a player who has at least as many wins as every other player) must be part of any dominant set (i.e. also of TC).

In particular, CO and UC work as a value for \(X_0\). In fact, we have \(\textrm{CO} \subseteq\textrm{UC} \subseteq\textrm{TC}\). Herr Hübler even included a short informal proof in his comments. In practice, CO is probably the best choice because it is the fastest to compute. However, the choices only differ by a small polynomial overhead, which does not matter much for our purposes.

Some students simply iterated until no further changes occur. Some iterate until the resulting set is dominant. Others (like the MC Sr) just performed n steps (where n is the number of players): since the output set has at most n elements, it must stabilise after at most n steps.
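The ‘iterate until nothing changes’ variant can be sketched as follows. This is a minimal illustration, not any particular submission; it assumes that a tournament is a list whose i-th entry is the dominion of player i, and it re-implements the helpers dominators and copeland under that assumption.

```haskell
import Data.List (sort, union)

type Tournament = [[Int]]

-- | All players that beat player i.
dominators :: Tournament -> Int -> [Int]
dominators t i = [ j | (j, d) <- zip [1 ..] t, i `elem` d ]

-- | Players with the maximal number of wins.
copeland :: Tournament -> [Int]
copeland t = [ i | (i, d) <- zip [1 ..] t, length d == best ]
  where best = maximum (map length t)

-- | Start with CO and keep adding dominators until the set is stable.
topCycle :: Tournament -> [Int]
topCycle t = go (copeland t)
  where
    go xs
      | next == xs = sort xs   -- nothing new was added: xs is dominant
      | otherwise  = go next
      where
        -- union keeps xs as a prefix and appends only new players
        next = xs `union` concatMap (dominators t) xs
```

On the tournament [[2,3,4],[3,5],[4,5],[2,5],[1]], the iteration starts with CO = [1], adds player 5 (who beats 1), then players 2, 3, and 4 (who beat 5), and stops with all five players.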

The 3 submissions that were disqualified due to timeout earlier also took this iterative approach (well, at least 2 of them. The MC Sr is not sure about how the third solution works – it is quite long). However, they made the mistake of adding every dominator of every element in the set in every step, even if that dominator was already in the set. When the algorithm needs few iterations, this can work out all right, but the list can reach exponential length in the worst case (e.g. in one case, an input of size 50 leads to an output of length 3,481,436).
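The blow-up is easy to reproduce on a tiny example. The sketch below (hypothetical names, same assumed tournament representation as a list of dominions) performs the step without removing duplicates and records the list length after each iteration.

```haskell
type Tournament = [[Int]]

-- | All players that beat player i.
dominators :: Tournament -> Int -> [Int]
dominators t i = [ j | (j, d) <- zip [1 ..] t, i `elem` d ]

-- | One step WITHOUT removing duplicates: every dominator of every element
-- is appended, even if it is already present.
naiveStep :: Tournament -> [Int] -> [Int]
naiveStep t xs = xs ++ concatMap (dominators t) xs

-- | Lengths of the first k+1 iterates when starting from [1].
naiveLengths :: Tournament -> Int -> [Int]
naiveLengths t k = map length (take (k + 1) (iterate (naiveStep t) [1]))
```

In the 3-cycle [[2],[3],[1]] every player has exactly one dominator, so the list doubles in every step: naiveLengths [[2],[3],[1]] 5 yields [1,2,4,8,16,32], although the set it represents never has more than three elements.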

Like the MC Sr, ten out of the Top 30 students used CO as the initial set (code name CO in the ranking) and 8 more used UC (code name UC), e.g. the following 35-token solution due to Herr Defant:

topCycle tournament = tournament `helper2` length tournament

helper2 :: [[Int]] -> Int -> [Int]
helper2 tournament 0 = copeland tournament
helper2 tournament i = xs `union` concatMap (tournament `dominators`) xs
  where
    xs = helper2 tournament (i -1)

One student (Herr Pietsch, 51 tokens) took the non-deterministic approach (code name [x]):

topCycle tournament = shortest $ Set.toList . until (extension Set.isSubsetOf) (extension Set.union) . Set.singleton <$> players tournament
  where extension f g = f (Set.unions $ Set.map (dominatorSets !! ) g) g
        dominatorSets = Set.empty: (Set.fromList . dominators tournament <$> players tournament)

Two more used only the first element of CO (code name head CO), e.g. Herr Hübler (38 tokens):

topCycle tournament = addToDZHK tournament mempty [head $ copeland tournament]

addToDZHK :: [[Int]] -> [Int] -> [Int] -> [Int]
addToDZHK tournament oldDZHK currDZHK =
  if oldDZHK == currDZHK then oldDZHK
  else addToDZHK tournament currDZHK $ nub $ currDZHK ++ concatMap (dominators tournament) currDZHK

This is somewhat puzzling, as it is actually slightly slower and needs more tokens than just taking all of CO.

8 other students used a very different iterative approach (code name: desc dom): they simply sorted the players in descending order by the sizes of their dominions and then added them one after another until reaching a dominant set, cf. e.g. the following 23-token solution by Herr Lang.

topCycle tournament = last $ filter (dominant tournament) $ tails $ sortOn (length . dominion tournament) $ players tournament

Herr Lang even included a two-page PDF proof showing that this works (including an introduction and a bibliography!), which the MC Sr found very charming. The MC Sr briefly considered whether to hand out bonus points but decided against it. Instead, he awards Herr Lang an academic hat emoji 🎓 to honour his academic fervour. Actually, let’s give one to Herr Hübler for the short proof in his comments as well 🎓.

Herr Schmidmeier has an interesting 20-token twist on UC:

topCycle tournament = until (dominant tournament) (nub . (dominators tournament `concatMap`)) $ uncoveredSet tournament

He does not use \(X_{i+1} := X_i \cup \bar D(X_i)\), but rather \(X_{i+1} := \bar D(X_i)\). The MC Sr does not know why this terminates, but apparently it works. If Herr Schmidmeier has a proof for the termination of his function, the MC Sr would be very much interested in seeing it (in return for a 🎓 emoji). Alternatively, if anybody can offer up a counterexample where this does not terminate, they will be awarded a 🎓 as well.

Herr Bahners used a very different approach (code name: SCC, 26 tokens): he used Haskell’s Data.Graph module (which ships with the containers package, to the MC Sr’s astonishment) to compute the strongly-connected components of the tournament graph, the biggest one of which (by topological ordering) is the top cycle (hence also presumably its name).

import Data.Graph

topCycle tournament = flattenSCC (last (stronglyConnComp [(x,x,dominion tournament x) | x <- players tournament]))

The MC Sr’s solution (CO, 18 tokens) is again deeply cryptic:

topCycle = concatMap snd . ap zip (iterate . fmap nub . concatMap . dominators <*> copeland)

Again, it took the MC Sr quite some time to reverse-engineer what he had done here. A deobfuscated version of the implementation is this:

topCycle xs = concatMap snd (zip xs (iterate (\ys -> nub (concatMap (dominators xs) ys)) (copeland xs)))

The iterate produces an infinite list beginning with the set CO, then all dominators of any player in CO, then all dominators of these etc. Zipping them with the original list xs then takes the first n elements of that infinite sequence, which are then simply concatenated to produce the final result.

Conclusion

The MC Sr was very pleased with the creativity of the students this week. He did not expect that many students to figure out the iterative solution and was pleasantly surprised – even more so to find other creative approaches like the one with SCCs or the one taking increasing prefixes of the ranking by dominion size. He was also very happy that none of the serious submissions had any problems with correctness (as far as he could tell), apart perhaps from Herr Schmitz’s close call – which was even fully intentional, tut tut! Don’t listen to the demons too much, Herr Schmitz.

What did we learn this week?

- What a refreshing week! The MC Sr actually learnt a few new things from the student submissions. He always enjoys when a submission surprises him.

- That said, the MC Sr does not enjoy when a submission surprises him by being sneakily incorrect. Some students like to play dangerous games with correctness and the MC Sr took a mental note to watch them closely in the future (no names will be mentioned because that would be unfair to Herr Schmitz). The MC Sr will have none of it – correctness is paramount!

- Choice Theory makes for some interesting programming tasks. In fact, if this made you curious, you should absolutely check out Prof. Brandt’s lecture Computational Social Choice whenever it gets offered again (Prof. Brandt is probably too busy teaching Diskrete Strukturen at the moment – the MC Sr does not envy him!). Well, in the meantime, why not look at Algorithmic Game Theory instead?

- In something of a foreshadowing of Week 5, some students submitted (unsolicited!) proofs this week. If you’re eager to prove things, the Chair for Logic and Verification might be for you! We have some lovely lectures called Functional Data Structures and Semantics where you get to prove things with a cutting edge Proof Assistant called Isabelle. It’s basically a computer game. 😉 This is how Prof. Nipkow caught the MC Sr back in the day. It is very addictive!

- The MC Sr is a very ~~lazy~~ busy man and sometimes things just take a while. 🙂

- The MC Sr is getting so old by now that he keeps forgetting how his own solutions work. Perhaps he should write his blog posts in a more timely fashion in the future so that he can still remember how they work.

Update: Herr Hübler responded to the MC Sr’s challenge and proved the correctness of Herr Schmidmeier’s algorithm (PDF). He will thus be awarded a second 🎓 emoji (he seems to really covet these). The basic idea is that if TC ≠ CO, there is an element \(x\in\textrm{CO}\) that lies on cycles of length 3 and 4 and will thus be present in every iteration beyond the 6th as any number ≥ 6 is a sum of multiples of 3 and 4. Since every element of TC is reachable from that \(x\) in at most \(n\) steps, we get all of TC in at most \(n+6\) steps.
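The arithmetic fact used in the proof (every number ≥ 6 can be written as \(3a + 4b\) with non-negative \(a, b\), e.g. \(6 = 3+3\), \(7 = 3+4\), \(8 = 4+4\), and from there on one keeps adding 3) can be sanity-checked with a few lines. This is merely a brute-force check with a made-up function name, not part of Herr Hübler’s proof.

```haskell
-- | True iff n = 3*a + 4*b for some non-negative integers a and b.
isSumOf3sAnd4s :: Int -> Bool
isSumOf3sAnd4s n = or [ (n - 3 * a) `mod` 4 == 0 | a <- [0 .. n `div` 3] ]
```

The check succeeds for 6, 7, and 8 and fails for 5, which is why the bound in the argument is 6.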

To be sure that the proof is correct, the MC Sr formalised it in his favourite proof assistant Isabelle. For those of you that are interested, you can look at the Isabelle file or at the PDF document generated from it (the interesting bits are in the ‘Main proof’ section, starting at page 9).

Final Scores Week 2

| Rank | Name | Approach | Tokens | Points |
|---|---|---|---|---|
| 🥇 | Severin Schmidmeier | UC | 58 | 30 |
| 🥇 | Simon Kammermeier | CO | 58 | 30 |
| 🥉 | Florian Weiser | desc dom | 63 | 28 |
| 4. | Max Lang 🎓 | desc dom | 66 | 27 |
| 5. | Max Schröder | desc dom | 67 | 26 |
| 6. | Nils Imdahl | desc dom | 67 | 25 |
| 7. | Janin Chaib | UC | 69 | 24 |
| 8. | Armin Ettenhofer | desc dom | 70 | 23 |
| 9. | Felix Zehetbauer | CO | 71 | 22 |
| 10. | Nils Harmsen | UC | 70 + 3 | 21 |
| | JiWoo Hwang | desc dom | 73 | 21 |
| 12. | Adam Nanouche | CO | 74 | 19 |
| 13. | Dan Lionis | desc dom | 79 | 18 |
| 14. | Malte Schmitz | UC | 80 | 17 |
| 15. | Alexander Höhn | CO | 85 | 16 |
| 16. | Florian Hübler 🎓🎓 | head CO | 88 | 15 |
| 17. | Benjamin Defant | CO | 88 | 14 |
| 18. | Georg Kreuzmayr | CO | 89 | 13 |
| 19. | Luis Bahners | SCC | 92 | 12 |
| 20. | Christoph Rotte | UC | 93 | 11 |
| 21. | Selina Lin | CO | 94 | 10 |
| 22. | David Berger | head CO | 95 | 9 |
| 23. | Jalil Salamé Messina | CO | 96 | 8 |
| 24. | Philip Haitzer | UC | 97 | 7 |
| 25. | Paul Hofmeier | UC | 100 | 6 |
| 26. | Simon Longhao Ouyang | CO | 101 | 5 |
| 27. | Vitus Hofmeier | UC | 102 | 4 |
| 28. | Noel Chia | desc dom | 106 | 3 |
| 29. | Manuel Pietsch | [x] | 109 | 2 |
| 30. | Johannes Volk | head UC | 114 | 1 |

Week 3

The Odyssey

Ahoi! The last two weeks you had the honour to read a blog entry by the venerable MC Sr. Since the MC Sr has other important stuff to attend to, such as designing new Wettbewerb exercises, it fell unto the MC Jr Jr to take over the rudder and embark on the odyssey to evaluate this week’s Wettbewerb. And what an arduous journey it was: at first the MC Jr Jr had to take a dive into the arcane arts of bash scripting again, which included brushing up on knowledge about wonderful command line tools such as awk, paste, sort, and xsv. Normally, the MC Jr Jr would only look at bash scripts from a great distance with his binoculars. In this case, the MC Sr didn’t leave him a choice since he wrote the production-grade Wettbewerbsevaluierungssystem (WES™) as a single bash script (the MC Jr Jr must note that the system is still easier to understand than last year’s system, which was written in Haskell). The MC Jr Jr modified the system to fit the purposes of this week’s Wettbewerb and evaluated this week’s submissions. After going through the 30 best submissions, the MC Jr Jr was dismayed as there was no separation between remarkable and unremarkable submissions. In particular, the best solution only handled sudokus of size up to 9x9 correctly. The MC Jr Jr didn’t want to let that sail, so he decided to eliminate all solutions which couldn’t detect an invalid 32x32 sudoku or couldn’t solve a 32x32 sudoku with just one empty entry. He performed this sanity check and noticed that obviously incorrect solutions passed the tests. Investigating further, he noticed that ein Malheur had happened to him while modifying the MC Sr’s code: in many cases, the system had evaluated the model solution instead of the student’s submission. This forced the MC Jr Jr to evaluate all submissions again. On the plus side, he narrowly circumnavigated a great amount of embarrassment and managed to achieve a reasonable separation of remarkable submissions from unremarkable ones after all.

Evaluation

With the adventures surrounding the evaluation out of the way, let’s talk about the actual evaluation.

The MC Jr Jr considered all homework submissions with WETT tags that had a grade of at least 89% and passed the aforementioned sanity check, which left him with 210 submissions.

As the MC Jr Jr ~~was lazy~~ wanted to use realistic test cases, he decided to use an existing data set to evaluate the submissions (see Test.hs).

This comes with the advantage that those are real sudokus with a unique solution.

The hardSudoku from the template, on the other hand, has multiple solutions and thus isn’t a real sudoku as a student pointed out to the MC Jr Jr.

The MC Jr Jr subjected the submissions to the following three files from the data set:

- Firstly, he set the submissions loose on the \(10^5\) sudokus in the file puzzles0_kaggle. Apparently, this data set is used to train machine learning models to solve sudokus (e.g. see here) instead of using facts and logic?! Anyway, the MC Jr Jr eliminated those solutions that solved fewer than 60000 of those sudokus within 60 seconds. This includes the zipper model solution and a guest submission by Simon Hanssen with 7982 and 9015 solved sudokus, respectively. On the other hand, 42 submissions weathered this first storm, with 19 of them even solving all sudokus.

- Secondly, the MC Jr Jr chose the file puzzles1_unbiased with \(10^6\) moderately difficult sudokus and gave the students 120 seconds. He then assigned points by rank in accordance with the usual Wettbewerb rules. Curiously, one submission by Herr Felix Zehetbauer didn’t solve some of those sudokus correctly, so he was eliminated.

- Lastly, the students were confronted with some hard sudokus from the file puzzles4_forum_hardest_1905. This time the MC Jr Jr let them chooch for a generous 180 seconds; however, since predicting termination is a notoriously difficult (even undecidable) problem, he rudely interrupted after more than 500 seconds. In this case, the submission was awarded no points. Fortunately, only the MC Sr’s wildly complicated solution hit this limit, which means that this time he was bested by you, the students.

- The final score is the sum of the scores from the latter two runs.

Solutions

While the captain MC Jr Jr rambles on and on about the (mis-)adventures of the evaluation, he catches wind of a planned mutiny on account of it not being clear when the ship will reach its destination, if at all. To get back on course, he should really talk about the solutions now! And there are quite some things to talk about, which will be split into three parts: micro-optimisations, data structures, and, most importantly, algorithmic tricks.

Micro-optimisation

The only substantial micro-optimisation is due to the Chef Malte Schmitz, who uses bitmasks to represent the numbers in a cell of the sudoku; that is, 00..01 represents a cell containing 1, 00..0100 a cell containing 3, and so on.

This allows one to check the constraints of the sudoku, i.e. distinctness of the numbers in each row, column, and square, using efficient bit fiddling operations such as setBit.

When using 64-bit Int, one has to assume that the sudoku has size at most 49x49, but the Chef was smart and checked the admissibility of this assumption with the MC Jr Jr before the submission deadline.
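To illustrate the idea (this is not the Chef’s code), the distinctness check for one row, column, or square can be sketched with a single Int as a set of already seen numbers. The function name noDuplicates is hypothetical, and the sketch assumes that 0 marks an empty cell and that all entries are at most 49.

```haskell
import Data.Bits (setBit, testBit)

-- | Checks that all non-zero entries are pairwise distinct.
-- Assumption: 0 marks an empty cell; the number x is stored as bit (x - 1).
noDuplicates :: [Int] -> Bool
noDuplicates = go (0 :: Int)
  where
    go _ [] = True
    go seen (x : xs)
      | x == 0               = go seen xs                    -- skip empty cells
      | testBit seen (x - 1) = False                         -- x was seen before
      | otherwise            = go (setBit seen (x - 1)) xs   -- remember x
```

Each membership test and insertion is a constant-time bit operation, in contrast to a linear scan with elem.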

While going through the submission of Herr Jonas Hübler, the MC Jr Jr thought that Herr Hübler may have fallen victim to one of the classical blunders because he used foldl instead of foldl'; alas, in this case that was a false alarm and there was no difference in performance when using foldl'.

As the non-strict foldl might degrade the performance due to build-up of thunks in the accumulator, the students should be wary of calls to foldl in the future.
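As a reminder of what the difference looks like, here is a minimal sketch with two hypothetical summation functions. Both compute the same result; they only differ in when the accumulator is evaluated.

```haskell
import Data.List (foldl')

-- | Strict left fold: the accumulator is forced at every step.
sumStrict :: [Int] -> Int
sumStrict = foldl' (+) 0

-- | Lazy left fold: without optimisations, this may first build a long
-- chain of unevaluated additions before computing anything.
sumLazy :: [Int] -> Int
sumLazy = foldl (+) 0
```

Whether the lazy variant is actually slower depends on the optimisation level and on GHC’s strictness analysis, which is presumably why no difference showed up in the case above.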

Data Structures

The data structures used by submissions fall into two general categories: search trees and immutable arrays (apparently nobody dared to use mutable arrays within the ST monad).

For arrays, the two interesting modules are Data.Array and Data.Array.Unboxed, where unboxed means that the values are stored directly as opposed to storing references, thereby improving performance by eliminating one level of indirection.

All in all, only two students from the top 30 used arrays, namely Jalil Messina and Eddie Groh.

Herr Eddie Groh even invested some time into writing {-# INLINE ... #-} pragmas for his functions, but the MC Jr Jr isn’t sure if they helped much.

His submission also exhibits another interesting feature of arrays in Haskell, namely that you can use any type that is an instance of the Ix typeclass.

In particular, this allows him to use tuples as indices.

Search trees were more commonly used and came in the forms of Data.Map, Data.IntMap, and Data.Sequence which are all provided by the package containers.

The former two are basically the same: IntMap is just a specialised and more efficient version of Map for keys of type Int.

The latter module defines the type Seq, which offers a list-like interface and is implemented as a finger tree under the hood.

Consequently, all of the above data structures offer logarithmic-time random access to the elements which can be quite an improvement compared to the linear-time access of List.

For a summary of who used what, refer to the table below:

| (Int)Map, (Int)Set | Jalil Salamé Messina, Robert Imschweiler, Christoph Rotte, Florian Hübler |

| Seq | Malte Schmitz, Severin Schmidmeier |

An honorary mention goes out to those students that made themselves familiar with Zippers, for which the MC Jr Jr rewards them with a 🔍 of curiosity.

Even though some didn’t use Zippers in the end, you might still be interested in the model solution which can be improved by using Seq and applying the tricks we will come to shortly.

Altogether this would yield a good trade-off between elegance and performance.

Algorithmic tricks

Despite the importance of efficient data structures, the most significant part of a sudoku solver is the algorithm that powers it.

This is illustrated by the fact that the highest ranking student that uses data structures beyond lists only comes in sixth.

Starting with what will be called the basic approach, we will work our way up to the best solution of this week’s Wettbewerb.

The basic approach is similar to the naive backtracking approach of the model solution but it comes with an important optimisation: instead of trying every number in each step and checking whether the sudoku remains valid, one just considers those numbers which do not appear in the current row, column, and square.

This cuts down the number of possibilities that the solver considers and removes calls to the expensive isValidSudoku function during backtracking.

The following piece of code by Herr Houcemeddine Ben Ayed shows how to calculate the possible numbers for a cell in the sudoku.

possibleAt xss (i,j) = [x | x <- [1..n], x `notElem` selectRow xss i,
                                         x `notElem` selectColumn xss j,
                                         x `notElem` selectSquare xss k]
  where n = length xss
        k = (i `div` sqrtn) * sqrtn + (j `div` sqrtn)
        sqrtn = intRoot n

As you can see in the code sample, the row, column, and square are recomputed for each cell. Herr Flavio Principato recognised this, so he optimised his solution to only recompute what is necessary: for example, if the solver advances from one cell to the next in the same row, the square is only recomputed if it is different from that of the previous cell. Of course, the column has to be recomputed in any case.

In summary, with the exception of the Herren Simon Ouyang, David Berger, and Florian Hübler, everyone from rank 30 up to Marco Wenzel at rank 15 essentially implemented the basic approach. The other three almost managed to implement the constraint-based approach, which the MC Jr Jr will discuss next, but they fell short on execution: the first two recompute the constraints in each step, while the MC Jr Jr isn’t really sure what went wrong in Herr Hübler’s solution as it is quite complicated.

In the constraint based approach, one precomputes the possible values for each cell in the sudoku and updates them accordingly during backtracking.

To do this, the empty cells of a sudoku were often represented as a list [((Int, Int), [Int])] where the first component of each pair represents the position of the cell in the sudoku and the second component is a list of the remaining possible values for that cell.

Since updating the constraints is expensive, some people such as Herr Malte Schmitz fell back to the basic approach if the number of empty cells falls below a certain threshold.

Additionally, this representation allows one to easily implement heuristics to speed up the solver.

One heuristic, which all of the constraint-based solutions use, is to consider the cell with the least number of possible values in each backtracking step.

To implement this, the function minimumBy comes in handy.

With this approach, one implicitly implements the common sudoku solving technique naked singles, where you set the value of a cell if there is only one possible value left.
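As a minimal sketch of this heuristic, assuming the list-of-pairs representation described above: the names Cell and pickCell are hypothetical and not taken from any submission.

```haskell
import Data.List (minimumBy)
import Data.Ord (comparing)

-- Hypothetical alias: an empty cell is its position plus its remaining candidates.
type Cell = ((Int, Int), [Int])

-- Pick the empty cell with the fewest remaining candidates.
-- Returns Nothing when there are no empty cells left, i.e. the sudoku is solved.
pickCell :: [Cell] -> Maybe Cell
pickCell [] = Nothing
pickCell cells = Just (minimumBy (comparing (length . snd)) cells)
```

A cell with exactly one candidate is always picked before any cell with more, which is how naked singles fall out of the heuristic for free. A cell with no candidates is picked even earlier, so a dead end is detected as soon as possible.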

Herr Max Schröder also searches for hidden singles in a preprocessing step in order to place a value in the cell of a row/column/square if there is only one possible position for it.

This seems to serve him quite well for the moderate sudokus where he placed first with a comfortable lead of around 60000 sudokus over the second place.
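The search for hidden singles can be sketched roughly as follows. This is not Herr Schröder’s code: the function hiddenSingles and the Cell alias are hypothetical, and the sketch assumes it is handed the empty cells of a single row, column, or square together with their up-to-date candidate lists.

```haskell
import Data.List (nub)

-- Hypothetical alias: an empty cell is its position plus its remaining candidates.
type Cell = ((Int, Int), [Int])

-- For the empty cells of one unit (row, column, or square), find every value
-- that is a candidate in exactly one cell, paired with that cell's position.
hiddenSingles :: [Cell] -> [((Int, Int), Int)]
hiddenSingles unit =
  [ (pos, v)
  | v <- nub (concatMap snd unit)
  -- the pattern [pos] only matches when exactly one cell admits v
  , [pos] <- [[p | (p, cands) <- unit, v `elem` cands]]
  ]
```

For the unit [((0,0),[1,2]), ((0,1),[2,3]), ((0,2),[2,3])], the value 1 fits only at position (0,0), so it can be placed there even though that cell still has two candidates.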

The MC Sr went even further and implemented heuristics such as X-Wings and XY-Wings.

If this sounds a bit too sci-fi for you, you might be right as the MC Sr wouldn’t have made his way onto the leaderboard this week.

Finally, Herr Jakob Florian Goes came in first this week by virtue of a unique preprocessing step: he sorts the possible values of each cell with respect to their frequency in all cells, highest frequency first.

With that, the values with highest frequency are tried first during backtracking thereby reducing the number of possible values in maximally many other cells.

This results in a solution that handles hard sudokus very well and is quite elegant to boot.
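A frequency-based ordering of this kind might look like the sketch below. It only illustrates the idea and is not Herr Goes’ actual code: the names frequencies and sortByFrequency are made up, and the use of Data.Map from the containers package is an assumption.

```haskell
import Data.List (sortOn)
import qualified Data.Map.Strict as Map
import Data.Ord (Down (..))

-- Hypothetical alias: an empty cell is its position plus its remaining candidates.
type Cell = ((Int, Int), [Int])

-- Count how often each value occurs across all candidate lists.
frequencies :: [Cell] -> Map.Map Int Int
frequencies cells =
  Map.fromListWith (+) [(v, 1) | (_, cands) <- cells, v <- cands]

-- Reorder every candidate list so that the globally most frequent values
-- come first; sortOn is stable, so ties keep their original order.
sortByFrequency :: [Cell] -> [Cell]
sortByFrequency cells =
  [(pos, sortOn (Down . freq) cands) | (pos, cands) <- cells]
  where
    counts = frequencies cells
    freq v = Map.findWithDefault 0 v counts
```

Since backtracking tries candidates front to back, this reordering alone changes which branches are explored first without touching the solver itself.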

Conclusion

After the odyssey that was the evaluation, the MC Jr Jr was pleased to find a mixture of sometimes elegant, sometimes creative, and sometimes both elegant and creative solutions. It is remarkable that all of the top 30 students found a solution that easily outperforms the model solution and, even better, the solution by the MC Sr.

What did we learn this week?

- Linux command-line tools are powerful but, as you know, with great power comes great responsibility. Consequently, the MC Jr Jr should be more careful when editing bash scripts in the future.

- Use of appropriate data structures is important but, at least this week, a good algorithm beats a good data structure.

- Nobody really knows if the MC Jr Jr is a real Kapitän zur See or just likes to pretend.

- A little bit of preprocessing can go a long way.

Update: the MC Jr Jr managed to mess up the sorting for the second run. Correcting this led to some changes in both this week’s and the whole semester’s leaderboard.

Final Scores Week 3

| Rank | Name | #solved 1 | Score 1 | #solved 4 | Score 4 | Final Score | Points |

|---|---|---|---|---|---|---|---|

| 🥇 | Jakob Florian Goes | 99072 | 29 | 7444 | 30 | 59 | 30 |

| 🥈 | Max Schröder | 160198 | 30 | 5791 | 27 | 57 | 29 |

| 🥉 | Kilian Mio | 96779 | 28 | 6127 | 28 | 56 | 28 |

| 🥉 | Adrian Reuter 🔍 | 85808 | 27 | 6441 | 29 | 56 | 28 |

| 5. | Maren Biltzinger | 77251 | 26 | 4352 | 26 | 52 | 26 |

| 6. | Robert Imschweiler | 69358 | 25 | 3290 | 25 | 50 | 25 |

| 7. | Luis Bahners | 60136 | 24 | 3251 | 24 | 48 | 24 |

| 8. | Benjamin Defant | 44816 | 22 | 2313 | 23 | 45 | 23 |

| 9. | Jalil Salamé Messina | 53168 | 23 | 667 | 19 | 42 | 22 |

| 10. | Sophia Knapp 🔍 | 17248 | 19 | 1407 | 22 | 41 | 21 |

| 11. | Florian Hübler | 18193 | 20 | 681 | 20 | 40 | 20 |

| 12. | Niklas Johne | 16324 | 18 | 767 | 21 | 39 | 19 |

| 13. | Malte Schmitz | 24096 | 21 | 339 | 16 | 37 | 18 |

| 14. | Eddie Groh | 14977 | 17 | 517 | 18 | 35 | 17 |

| 15. | Clara Mehler | 10040 | 13 | 421 | 17 | 30 | 16 |

| 16. | Flavio Principato | 10499 | 14 | 305 | 15 | 29 | 15 |

| 17. | Christoph Rotte | 12342 | 16 | 194 | 9 | 25 | 14 |

| 17. | Marco Menzel | 7600 | 12 | 235 | 13 | 25 | 14 |

| 19. | Alexander Steinhauer | 6347 | 11 | 214 | 12 | 23 | 12 |

| 20. | Jonathan Bucher | 6014 | 10 | 200 | 11 | 21 | 11 |

| 21. | Mark Pylyavskyy 🔍 | 10802 | 15 | 177 | 3 | 18 | 10 |

| 22. | Andreas Papon | 5805 | 9 | 195 | 10 | 19 | 9 |

| 23. | Severin Schmidmeier | 5452 | 8 | 193 | 8 | 16 | 8 |

| 24. | Houcemeddine Ben Ayed | 2975 | 0 | 250 | 14 | 14 | 7 |

| 25. | Tianze Huang | 4840 | 7 | 181 | 6 | 13 | 6 |

| 26. | Björn Kremser | 4832 | 6 | 180 | 5 | 11 | 5 |

| 27. | Simon Longhao Ouyang | 4730 | 5 | 182 | 7 | 12 | 4 |

| 28. | David Berger | 4640 | 4 | 178 | 4 | 8 | 3 |

| 29. | Julian Hanuschek | 4357 | 3 | 167 | 2 | 5 | 2 |

| 30. | Thilo Linke | 4284 | 2 | 162 | 0 | 2 | 1 |

| 30. | Felipe Peter | 4175 | 1 | 164 | 1 | 2 | 1 |

Week 4

It’s a Christmas New Year’s Late-January miracle: The blog entry for week 4 of the Wettbewerb is finally here. The MC Jr Sr. apologizes profusely for the delay.

At least this fits in nicely with last week’s theme of the Odyssey, as you have surely all been waiting for this entry just as faithfully as Penelope did for her husband.

Evaluation

To remind ourselves, this week’s task was to parse a simplified version of XML in as few tokens as possible. This should have made the evaluation of the submissions trivial, if it were not for thorny issues of correctness. As usual, the MCs were not able to create a test suite that covered all edge cases, and so some slightly incorrect solutions slipped past. In particular, there were two bugs that afflicted some of the students in the Top 30:

- Some submissions allowed spaces inside tags, if they only appear in either the opening or the closing tag, thus accepting strings like < a></a> or <a></ a>.

- Similarly, some competitors incorrectly sanitized > or < inside tagnames, allowing e.g. <<a></<a>.

Since these problems are easily fixable with a couple of extra tokens, the MC felt it would be too harsh to exclude these submissions outright. Instead, he decided to let them off with a warning and a three point deduction. (The buggy submissions are marked with a 🐞 in the ranking)

With that out of the way, let’s have a look at some of the submissions.

Solutions

Ad-Hoc Approaches

Most submissions took an ad-hoc approach to parsing. The most popular way of going about this was to simply traverse the input and store any opened tags on a stack, popping them when the matching closing tag is encountered.

This certainly works, but also tends to produce code that is hard to read and maintain.

A good example of this approach is this lavishly commented solution by Herr Schröder, whose 114 tokens were good enough for 8th place.

The main reason why Herr Schröder fared somewhat better than most of his competitors is his extensive use of the Data.Text library, which provides a richer interface (and better performance) than plain strings, helping to save some tokens.

A slightly more structured way of going about the task is to extract the tags in one pass over the input, and then check whether they match and are well-formed in a separate step. This does not necessarily cost more tokens — the Top 10 contains examples of both approaches — and separating the two phases produces more pleasant code. A particularly readable example comes courtesy of Herr Steinhauer.
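To make the two-phase idea concrete, here is a deliberately verbose sketch that ignores token counts. It is not taken from any submission. The names Tag, tags, balanced, and xmlLightSketch are hypothetical, and the grammar is an assumption inferred from this post: tag names are non-empty and alphanumeric, spaces may only appear directly before the closing >, and text outside tags may not contain < or >.

```haskell
import Data.Char (isAlphaNum)

-- A tag is either opening or closing and carries its name.
data Tag = Open String | Close String
  deriving (Eq, Show)

-- Phase 1: extract all tags, or Nothing if some tag is malformed.
tags :: String -> Maybe [Tag]
tags [] = Just []
tags ('<' : '/' : rest) = tagWith Close rest
tags ('<' : rest) = tagWith Open rest
tags ('>' : _) = Nothing -- stray '>' outside of a tag
tags (_ : rest) = tags rest -- ordinary text is skipped

-- Read "name spaces* '>'" and continue with the remaining input.
tagWith :: (String -> Tag) -> String -> Maybe [Tag]
tagWith mk input =
  case span isAlphaNum input of
    (name@(_ : _), rest)
      | '>' : rest' <- dropWhile (== ' ') rest -> (mk name :) <$> tags rest'
    _ -> Nothing

-- Phase 2: check nesting with a stack of the currently open tag names.
balanced :: [String] -> [Tag] -> Bool
balanced stack (Open n : ts) = balanced (n : stack) ts
balanced (top : stack) (Close n : ts) = top == n && balanced stack ts
balanced stack [] = null stack
balanced _ _ = False -- closing tag with nothing open

xmlLightSketch :: String -> Bool
xmlLightSketch = maybe False (balanced []) . tags
```

Under these assumptions, the sketch accepts <a>hi<b ></b ></a> and rejects the two buggy cases from the evaluation, < a></a> and <<a></<a>, because both fail in the first phase before any matching takes place.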

State Machines

The Herren Ouyang and Goes used state machines for their solutions. The states of their machine, e.g. whether we are currently inside an opening or closing tag, are encoded in an additional integer argument to their parsing function. If tokens were of no concern, defining a data type with descriptive constructor names for the states would be even better. Both students, however, made some unconventional variable name choices, thus sacrificing any readability gains the state machine approach could have afforded them. Here is Herr Goes’ submission:

    xmlLight :: String -> Bool
    xmlLight = f 0 []
    f 0 s (c:cs) = c == '<' && f 1 ([] : s) cs || c `notElem` "<>" && f 0 s cs
    f 1 s ('/':cs) = f 3 s cs
    f q (a:s) (c:cs) | q < 4 && c `notElem` "< />" = f q ((a ++ [c]) : s) cs
    f 3 (a:b:s)(c:cs) | c `elem` " >" = a == b && g c s cs
    f _ s (c:cs) | c `elem` " >" = g c s cs
    f q s _ = q == 0 && null s
    g ' ' = f 4
    g _ = f 0

Herr Goes probably learned to program in the halcyon days when every byte was precious and variable names were strictly limited in length. Or perhaps he just needs a reminder that tokens ≠ characters. Herr Ouyang, on the other hand, has no such concerns and instead chose outlandishly long, though no more descriptive, names.
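For comparison, the remark about a data type with descriptive constructor names could be sketched as below. This is neither Herr Goes’ nor Herr Ouyang’s machine: the types Kind and State and the functions step, finish, and xmlLightFsm are hypothetical, and the sketch assumes non-empty alphanumeric tag names with spaces allowed only directly before the closing >.

```haskell
import Control.Monad (foldM)
import Data.Char (isAlphaNum)

data Kind = Opening | Closing

data State
  = Text               -- between tags
  | Name Kind String   -- reading a tag name (accumulated in reverse)
  | Spaces Kind String -- skipping spaces before the closing '>'

-- One transition of the machine; the list is the stack of open tag names.
-- Nothing means the input has been rejected.
step :: (State, [String]) -> Char -> Maybe (State, [String])
step (Text, st) '<' = Just (Name Opening "", st)
step (Text, _) '>' = Nothing
step (Text, st) _ = Just (Text, st)
step (Name Opening "", st) '/' = Just (Name Closing "", st)
step (Name k n, st) c
  | isAlphaNum c = Just (Name k (c : n), st)
  | null n = Nothing -- empty tag name
  | otherwise = step (Spaces k n, st) c
step (Spaces k n, st) ' ' = Just (Spaces k n, st)
step (Spaces k n, st) '>' = finish k (reverse n) st
step _ _ = Nothing

-- Push the name of an opening tag, or pop and compare for a closing tag.
finish :: Kind -> String -> [String] -> Maybe (State, [String])
finish Opening name st = Just (Text, name : st)
finish Closing name (top : st) | top == name = Just (Text, st)
finish _ _ _ = Nothing

-- Accept only if the machine ends outside of a tag with no tags left open.
xmlLightFsm :: String -> Bool
xmlLightFsm input = case foldM step (Text, []) input of
  Just (Text, []) -> True
  _ -> False
```

This costs far more tokens than an integer state, which is exactly the trade-off described above, but each transition can now be read without a legend.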

ReadP

The two best submissions this week, by Tobias Markus and Adrian Reuter, both used the ReadP parser combinator library, which is included in the base package. As Herr Markus notes, you have to scroll all the way down the module list to find ReadP, which might explain why it was not more popular.

Another possibility is the fact that ReadP uses 💀monads💀, which are notoriously scary and will only be covered at the very end of the lecture. For now, it suffices to know that the monadic nature of the operations in ReadP allows us to sequence them together using do-notation. Here is the 39 token solution of Herr Markus:

    xmlLight :: String -> Bool
    xmlLight = not . null . readP_to_S do
      let innerP = satisfy (`notElem` "<>") +++ do
            char '<'
            id1 <- munch1 isAlphaNum
            skipSpaces
            char '>'
            skipMany innerP
            string "</"
            string id1
            skipSpaces
            char '>'
      skipMany innerP
      eof

As a brief explanation, the innerP function parses one of two alternatives (connected by the +++ operator): either a string containing no tags, or a single pair of matching tags with a recursive call to innerP inside. The string and char functions look for the exact string/character they are given as an argument and fail if they do not encounter it, whereas the skip operations simply skip over as many occurrences of e.g. spaces as necessary.

The line id1 <- munch1 isAlphaNum reads as many alphanumeric characters as are available and remembers them in id1. Once the closing tag is encountered, string id1 then makes sure that the tagnames match.
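To try these building blocks in isolation, a small sketch such as the following may help. The parser openTag and the helper parseAll are hypothetical names and not part of any submission; only the ReadP functions themselves come from base.

```haskell
import Data.Char (isAlphaNum)
import Text.ParserCombinators.ReadP

-- Parse a single opening tag such as "<abc  >" and return its name.
openTag :: ReadP String
openTag = do
  _ <- char '<'
  name <- munch1 isAlphaNum -- at least one alphanumeric character
  skipSpaces
  _ <- char '>'
  return name

-- Run a parser and keep only the results that consumed the whole input.
parseAll :: ReadP a -> String -> [a]
parseAll p input = [x | (x, "") <- readP_to_S (p <* eof) input]
```

In GHCi, parseAll openTag "<abc  >" yields ["abc"], while parseAll openTag "< abc>" yields the empty list, since munch1 fails on the leading space.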

Besides being shorter, more readable and easier to maintain and extend, using a parsing library should also offer better performance than any ad-hoc solutions.

The MC Sr’s solution also uses ReadP and coincidentally matches Herr Markus’s 39 tokens.

We should also note that there are far more popular parsing libraries than ReadP, like parsec and friends, which are more fully featured and offer better performance.

Addendum by the MC Sr: The MC Sr rejects the assertion that it was a coincidence that he had the same number of tokens as Herr Markus. Rather, Herr Markus had boasted about his 39-token solution on Zulip, and the MC Sr was spurred on by this since he could not allow himself to be outdone by a student yet again. So he toiled until he was able to match Herr Markus’s solution. (and, to his relief, Herr Markus did not respond with a 38-token solution – these arms races are exhausting!)

Conclusion

What did we learn this week?

- Comments are the key to any MC’s heart. In many of this week’s submissions, the MC failed to understand what was going on without them.

- Finding a happy medium between brevity and verbosity can be a challenge. (cf. Herren Ouyang and Goes)

- The right choice of library can allow you to have your cake and eat it too.

Final Scores Week 4

| Rank | Name | Tokens | Points |

|---|---|---|---|

| 🥇 | Tobias Markus | 39 | 30 |

| 🥈 | Adrian Reuter | 61 | 29 |

| 🥉 | Malte Schmitz | 85 | 28 |

| 4. | Florian Hübler | 94 | 27 |

| 5. | Simon Kammermeier | 96 | 26 |

| 6. | Felix Zehetbauer 🐞 | 103 | 22 |

| 7. | Paul Hofmeier | 104 | 24 |

| 8. | Max Schröder | 114 | 23 |

| 9. | Maren Biltzinger 🐞 | 117 | 19 |

| 10. | Georg Kreuzmayr | 124 | 21 |

| 11. | Sophia Knapp | 135 | 20 |

| 12. | Benjamin Defant | 140 | 19 |

| 12. | Nils Cremer 🐞 | 140 | 19 |

| 14. | Tobias Schwarz | 143 | 17 |

| 15. | Julian Pritzi | 147 | 16 |

| 16. | Manuel Pietsch | 149 | 15 |

| 17. | Jakob Florian Goes | 150 | 14 |

| 18. | Max Lang | 153 | 13 |

| 18. | Robert Imschweiler | 153 | 13 |

| 20. | Severin Schmidmeier | 155 | 11 |

| 21. | Mark Pylyavskyy | 163 | 10 |

| 22. | Christoph Rotte | 167 | 9 |

| 23. | Alexander Steinhauer | 169 | 8 |

| 24. | Flavio Principato | 174 | 7 |

| 25. | Xuemei Zhang | 177 | 6 |

| 26. | Matthias Jugan | 185 | 5 |

| 27. | Daniel Geiß 🐞 | 188 | 1 |